There’s a lot of advice on the internet. Some of it is good, some of it is terrible, and some sits in the gray area between.

Within the fields of tech and startups, a lot of what people do day to day is influenced by what they’ve learned online; I doubt many people reading this article learned in school how to effectively market a product over Instagram!

Sorting the good from the bad is a challenge we all face, and one we have to become better at as individuals and as a society.

Improving our ability to analyze information doesn’t just mean identifying fake news, though we will look briefly at it. It also means being able to take a second look at informative journalism and the reporting of research; the kind of information which you might use to inform big business decisions. We’ll look at:

- The importance of recognizing the gray area in complex issues and reviewing the source text.

- How media reporting of studies can often obscure the real points.

- Why certain models of investigation can have inherent flaws, and why you should be wary of that.

At the end I’ll follow up with the 10 step process you can use to improve your analysis. This process is pulled from the recommendations of Carl Sagan, Richard Feynman, and Michael Shermer, and repurposed for your professional needs.

But first, let me tell you a little story…

How poor research almost killed my first startup

A good number of years ago, a friend and I were working hard on bringing out our first app. It wasn’t my first project, but it was certainly my first real startup.

We had a cursory knowledge of business and strategy but we knew less than we thought we knew. We were naive.

The app was Idyoma, and we were working on the MVP while gathering research and testing as many of our hypotheses as possible. So far, so good.

The app served to help language learners find others nearby to meet with to practice speaking and listening in another language. A kind of Tinder for languages.

To gather research, I would hold group language exchange meetings once, then twice, a week where people of all nationalities would come to meet each other and practice chatting in different languages. At these meetings we would aim to pull people aside through the evening to do quick face to face surveys with them and attempt to understand:

- The demographics they fit into

- Their experience of the problem they face

- What kind of added value services they would like to see

- Where the optimal price point would be for different added, complementary services

Many of the things we learned from this research were great and built on solid foundations. But not all of it.

From the research we found that ~30% of all respondents (150+) were willing to pay for a premium service. The primary desire for the premium came from security – wanting to know the person they are meeting with is who they say they are. The research suggested that the optimal price point for this service was $3.

This was fantastic news!

It wasn’t necessarily the long term monetization strategy we were looking for, but if 30% of users paid $3 each, every user we gained was worth roughly a dollar. And we knew we could gain users. Duolingo has 200 million of them. The math worked. Our first revenue stream to drive forward our business was in place!

I won’t bore you with the details of the number of investors we met with or the hours we spent making projections off this kind of data. We assumed that with an MVP app we might not reach the 30%, but could very reasonably be hitting 10 to 15%.

We didn’t.

Not even close.

About 1% of active users paid for the premium. Active users made up roughly 30% of the userbase. We made $111 in that first year after Apple and Google took their cut.
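To make the size of that gap concrete, here is a rough sketch of the arithmetic in Python. The 30%, 10%, 1%, and $3 figures come from the story above; treating the premium as a single $3 payment, ignoring app store fees, and assuming every user is active in the survey and planning scenarios are simplifying assumptions, and `revenue_per_user` is just an illustrative helper name.

```python
PRICE = 3.00  # price point suggested by the surveys, in dollars


def revenue_per_user(active_share, paying_share, price=PRICE):
    """Expected gross revenue per registered user, before app store fees.

    Assumes a single one-off payment per paying user (an assumption,
    the article does not say whether the premium recurred).
    """
    return active_share * paying_share * price


# What the surveys implied: ~30% of everyone pays.
survey = revenue_per_user(active_share=1.0, paying_share=0.30)

# The "cautious" planning figure: 10% of everyone pays.
planned = revenue_per_user(active_share=1.0, paying_share=0.10)

# What happened: ~30% of users were active, ~1% of those paid.
actual = revenue_per_user(active_share=0.30, paying_share=0.01)

print(f"survey:  ${survey:.3f} per user")
print(f"planned: ${planned:.3f} per user")
print(f"actual:  ${actual:.3f} per user")
print(f"planned / actual: {planned / actual:.0f}x")
```

Under these assumptions the survey figure works out to about $0.90 per user, while the real figure was closer to $0.009 per user. Even the cautious 10% projection was off by a factor of more than thirty.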

Our poor research practices and methodologies completely led us astray and wasted a huge amount of our time. We had to regroup, refocus, and pivot.

But it wasn’t only our naivety with regard to research which pulled us in the wrong direction. We were far too quick to trust the research other people had done or the broad sweeping statements they made in their articles. They had done it all before, hadn’t they? They must know what they’re talking about.

A good example of this can be found in this Inc article about outdated marketing strategies:

2. Content-rich websites: The silliest myth of the information age is that information is so valuable that a company is doing customers a favor by providing as much of it as possible. That’s why so many business websites are chock-a-block with content. The hope behind such sites is that customers will use that information to become more educated and thereby become convinced that they must buy what’s offered. In fact, customers are overloaded with information and resent being asked to dig through more. Smart companies only provide information when it’s clear a customer wants it, and then limit that information to the minimum useful amount. Today’s most effective websites show a single screen and request one action, like signing up for a newsletter.

This is written in an authoritative tone in Inc magazine – it must be true!

But where’s the data? How does this rule apply to different businesses with different products and different target audiences? Marcus Sheridan provides a follow up:

I don’t like disagreeing with another person “just because.” But in this case, I was so fundamentally bothered by an article I recently read in Inc. about marketing trends that I simply could not allow awful, inaccurate advice to be presented as fact (news/trends) without at least giving my thoughts.

And you can read his thoughts here on Sales Lion. In short:

And if people are “too busy,” why does my average customer at River Pools read over 100 pages of the website before they buy? (And please don’t say, “They’re different.”)

Sheridan’s customers are buying an expensive product for which they feel the need to do a good amount of research. As anyone who has worked in high touch sales will tell you, landing a big sale requires time, effort, and information.

And there’s the key point: you have to be able to evaluate the material you read online before accepting it as fact. Whether that be off the cuff statements about landing pages or detailed reporting of real in-the-world research.

To fix this, the next three sections will look at elements to be wary of:

- The social phenomena of fake news and the mechanisms which drive it

- The difficulties of relying on media reporting of complex topics

- The challenges of both reporting and undertaking research at every level

So you don’t have to waste a year of your startup’s development like I did.

Before we go into the analysis, here’s a quick summary of the analysis process we’ve detailed at the end of the article!

- Is it a reliable source?

- What audience is it for?

- Are the claims universal?

- Can you test the claims?

- Is it logically sound?

- Is the argument dependent on rhetoric?

- Can you analyze the data?

- Are there existing critiques?

- Can you evaluate the methodology?

- Are YOU biased?

You are Fake News

Recent discourse has become centered around Fake News.

The media talks about it, the President talks about it, we all talk about it.

Fake news is talked about as a political thing, but there is an argument to say that the underlying system which perpetuates it affects media of all forms. The political aspect of fake news is just the most obvious; the tip of the iceberg.

The political media is a useful microcosm from which to understand some of the systemic issues within modern digital media…

The Washington Post spoke to 38-year-old Paul Horner, who has been making a living from writing fake news articles and hoaxes for the past few years. Some of his stories have been tweeted out by influential figures, not least Eric Trump during the presidential campaign.

There are a lot of layers to the interview, but one quote sticks out. Horner was asked why he thought his articles were successful and why he was able to keep going:

Honestly, people are definitely dumber. They just keep passing stuff around. Nobody fact-checks anything anymore

It isn’t just that people don’t fact check. It’s that people might produce content for an audience which they know will not fact check. So people could be easily led and money could be easily made. This has the potential to accelerate the cycle of false reportage.

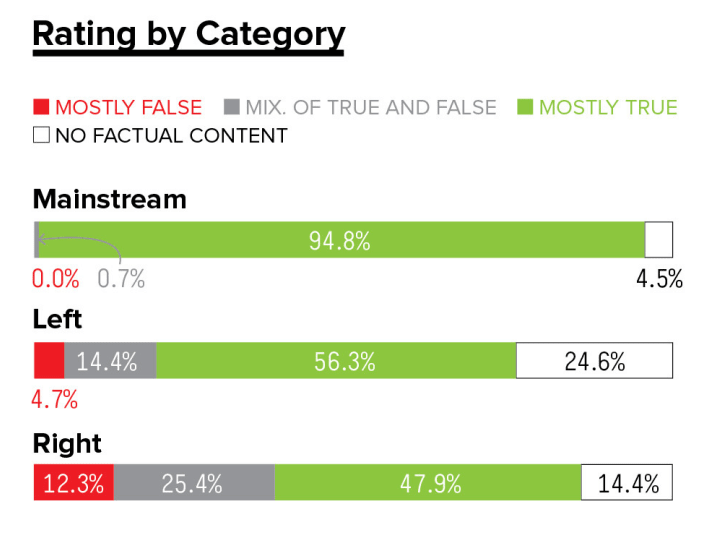

Buzzfeed News decided to gather the data to try to test these assumptions and see how widespread fake news reporting was. According to their study:

A BuzzFeed News analysis found that three big right-wing Facebook pages published false or misleading information 38% of the time during the period analyzed, and three large left-wing pages did so in nearly 20% of posts.

These figures claim to show how prevalent the dissemination of inaccurate reporting was. The graphic below aims to show how prevalent the creation of inaccurate reporting was.

I don’t want to make this a partisan issue, so let’s focus on the fact that fake news was being produced on both sides even if there are disparities. The question that leaves us with: what motivates people to create fake news?

The simple answer would be to say propaganda. People want to present their ideas as right and fabricate evidence where there is none to support their original claims. This may be true for many instances of fake news. However, it wasn’t true for Paul Horner above. He was motivated largely, it seems, by financial incentive.

One person who has written on this, from personal experience, is Ryan Holiday. He manipulated the media to push his business which sent glitter bombs to people. When he kicked off the media furore, the business didn’t really exist. Once he had garnered media attention he was able to then kick the little project into gear.

His book Trust Me I’m Lying: Confessions of a Media Manipulator provides a good insight into how stories propagate. He presents his views in this 5 minute video below which provides an overview of his critique of the media industry. Some of his points in summary:

- Media has moved from scarcity (in print) to abundance (online) which has lowered the need for high standards, and even incentivized low standards.

- Once a story appears in a small media outlet it is much more likely to be picked up by a larger outlet with less questioning.

- There is so much competition for your attention which means outlets need to be more extreme.

- Lots of reporters get paid based on traffic, which further incentivizes the creation of overly sensationalized content.

- It’s easier than ever before for marketers to manipulate the media because of these above factors, which further reduces standards.

Examples of real fake news

Let’s quickly look at two clear cut examples of fake news just to give ourselves some context.

First we have the Trump quote which allegedly appeared in People magazine. In this quote Trump said that Republicans are stupid and he would run as a Republican candidate because you could tell their base anything and they would believe it. The only thing is, there is no evidence that this quote ever appeared in People magazine.

Snopes found that People magazine didn’t interview Trump that year, while CNN backed them up.

In a similar vein, a number of outlets claimed that Hillary Clinton said millennials are losers. Some of us are. But is there any evidence that Clinton said these things?

The story was reported by Infowars and Gateway Pundit, but originated on Real True News. The original version can be found here, while the current live version has been updated to gloat about how it tricked other sites. Real True News has since rebranded to promote itself as satire. Legally, this rebranding was probably a wise move.

It doesn’t need to be made up to be fake

Not every story needs to be entirely based on lies and events which didn’t happen. Fake news can also come from decontextualizing a person’s statements to suggest different meaning.

Hillary Clinton was misrepresented by a number of sites, including Gateway Pundit, Infowars, and WND claiming that she “hates everyday Americans”. This phrase was used in emails leaked by Wikileaks and fitted the image of Clinton which her opponents wanted to see.

However, as Business Insider demonstrate, the quote was grossly misrepresented. The discussion was about messaging, and Clinton hated using the phrase and concept of Everyday Americans. When the emails are viewed in context, it is very clear what is being meant.

But it isn’t just tiny outlets which report like this. The BBC Trust were critical of a BBC report which took a comment by Jeremy Corbyn and edited the video to present his answer as a response to a different question. If the high journalistic standards of the BBC can come into question, then we must be prepared to be critical.

Other times, no one in the media is necessarily lying but the story is false and fantastical. Sometimes we believe things we otherwise wouldn’t believe because it supports our position. Like the story of the child who lied about dying and going to heaven. Is anyone really surprised to learn that wasn’t true?

Sometimes reality is simply very complex

Unfortunately, it isn’t always as simple as whether or not a 9 year old is factually accurate when describing their stroll through the pearly gates.

Different media outlets can report different angles on the same issue without anyone necessarily being wrong or even willfully misrepresentative of the facts.

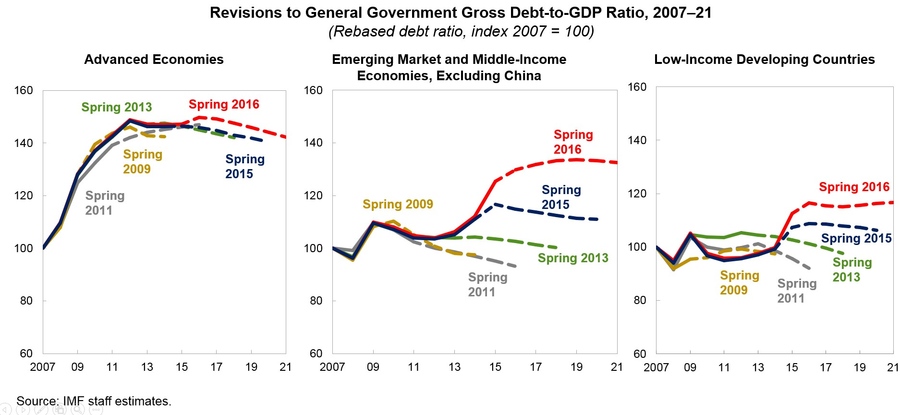

Recently, we had the International Monetary Fund fiscal policy report drama where the IMF changed tack and advocated for an increase in progressive taxation to fund growth through investment; a step away from the austerity policy which has broadly defined the West’s post-crash approach.

This is a complex set of issues with a massive report behind it to sift through and analyze.

As such, we had a slightly selective choice of variables on each side. The left leaning Guardian and the right leaning Telegraph both claimed this was a win for Britain’s left wing opposition leader, Jeremy Corbyn. They’re right in that the report is far closer ideologically to Corbyn than the IMF ever has been before, but Corbyn still wants tax rates above the IMF recommendations.

The right wing blog Order Order hit back, claiming that the IMF report suggested taxes 1pp lower than England’s current taxes, so it’s really a win for the Conservative party – further claiming that Britain’s effective tax rate is even higher, so the IMF report justifies further tax cuts! The Economist didn’t touch on that second point, but did reiterate Order Order’s first claim.

Richard Murphy, who has advised both Corbyn and the IMF, sees it as a victory for advocates of tax nonetheless, but chooses not to place it in the context of a win for any particular political party.

What is interesting to note is this one paragraph from the IMF’s blog announcement of their report – the blog post from which all these articles have drawn quotes.

There is no one-size-fits-all strategy. Redistribution should reflect a country’s specific circumstances, including underlying fiscal pressures, social preferences, and the government’s administrative and tax capacity. Also, taxes and transfers cannot be considered in isolation. Countries need to finance transfers, and the combination of alternative tax and transfer instruments that countries chose can have very different implications for equity.

For some reason, the linked articles chose not to run with that paragraph as their key point. Each article pulled what they wanted to show from the IMF blog post to create a narrative suited to them. In reality, the argument of whether the report favors lower or higher taxes than the UK currently has is moot. The report specifically states that tax is just one lever of many and the rates you set it at have to be appreciated in a broader context.

No one was really wrong, but I wouldn’t give anyone a gold star either.

The problem with incidents like this is that it is hard to investigate the issue yourself. A normal person has neither the time nor the expertise to rifle through the IMF’s gargantuan Fiscal Monitor to run the numbers and figure it all out.

I can’t say much more than try to read the source material and recognize the narratives where they’re being pushed, even if they’re “not wrong”.

Reporting evidence and stats can be very difficult

We’ve looked at the obviously fake news; whether reported with malice or with disregard for facts. We’ve also looked at the difficulties of reporting complex information with too many variables for one journalist working to a schedule to cover in its entirety. But what about everyday issues with reporting stats and other people’s research?

One phenomenon which Ryan Holiday covered in his video is known as the Woozle effect. The Woozle is a creature from the Winnie the Pooh stories which turns out to be imaginary. Pooh and Piglet find tracks which they believe belong to a Woozle. Every time they find more tracks, they attribute them to more Woozles, when in fact they are walking in circles and following their own footprints; each new set reinforces their initial inaccurate interpretation of the data.

This phenomenon describes how poor data can become accepted fact simply through being reported and re-reported without reassessing the evidence upon which it is based. In short, it is the problem of people reporting on what other people have reported.

Nate Silver, in his election coverage recap, finds similar issues. He describes how FiveThirtyEight had given Clinton a 70% chance of victory in the election, which he regarded as a very close affair. Other publications had then picked that figure up and used it as solid evidence that Clinton had a guaranteed victory.

The New York Times’ own Upshot model had predicted an 85% chance, and one of their own columnists was furious at how inaccurate they thought that figure ended up being. Silver, on the other hand, viewed that Upshot model 85% figure very differently:

After the election, for instance, The New York Times’ media columnist bashed the newspaper’s Upshot model (which had estimated Clinton’s chances at 85 percent) and others like it for projecting “a relatively easy victory for Hillary Clinton with all the certainty of a calculus solution.” That’s pretty much exactly the wrong way to describe such a forecast, since a probabilistic forecast is an expression of uncertainty. If a model gives a candidate a 15 percent chance, you’d expect that candidate to win about one election in every six or seven tries.

He goes on to say:

I don’t think we should be forgiving of innumeracy like this when it comes from prominent, experienced journalists.

The presentation of research to the public had created a narrative which was not necessarily tied to the results of the research. This shows us the problems of people reporting what researchers had reported.
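Silver's point about probabilistic forecasts can be checked with a few lines of Python. The sketch below simulates many imaginary elections in which the underdog has a 15% chance (the Upshot figure) or a 30% chance (the FiveThirtyEight figure) and counts the upsets. The function name, the number of simulated elections, and the fixed seed are illustrative choices, not anything taken from either model.

```python
import random


def simulate_upsets(p_underdog, n_elections, seed=0):
    """Count how many of n_elections an underdog wins, given that the
    underdog wins each one independently with probability p_underdog."""
    rng = random.Random(seed)
    return sum(rng.random() < p_underdog for _ in range(n_elections))


N = 10_000  # number of imaginary elections, chosen arbitrarily

for p in (0.15, 0.30):
    wins = simulate_upsets(p, n_elections=N)
    print(f"underdog at {p:.0%}: won {wins} of {N} simulated elections, "
          f"expected about 1 in {1 / p:.1f}")
```

A 15% chance is roughly one upset in every six or seven tries, which matches Silver's description, and a 30% chance is closer to one in three. Neither is anything like a guarantee for the favorite.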

Jerry Bowyer, writing for Forbes, goes even further. He claims that many researchers, both academic and commercial, interpret their own data incorrectly because they do not fully understand their analysis methods or they leave out key variables:

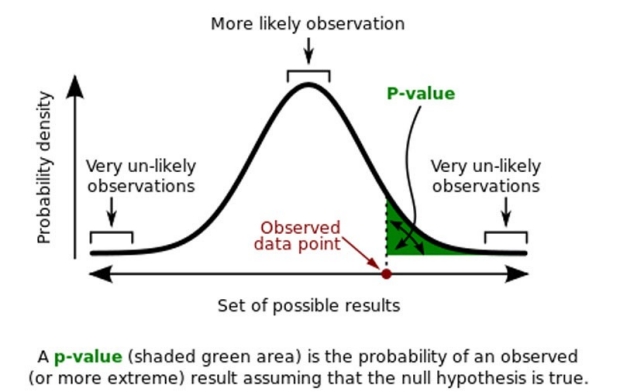

When a researcher does a study, he or she is usually dealing with two theories. One is their real theory (for example, momentum drives stock returns or this diet pill helps with weight loss) and the other is ‘the null hypothesis’ (momentum has nothing to do with stock returns or this diet pill has no effect on weight loss).

His main criticism is about a failure to properly understand and interpret p-values. In short, the p-value (probability value) tells you how likely you would be to see results at least as extreme as yours if the null hypothesis from the quote above were true. A small p-value means your results would be surprising under the null hypothesis; it does not tell you the probability that the real theory is correct.
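One way to get a feel for a p-value is to compute one by brute force. The sketch below uses a permutation test on Bowyer's diet pill example: if the pill truly did nothing, the group labels would be interchangeable, so we shuffle them many times and see how often chance alone produces a gap as large as the one observed. The weight loss numbers are invented purely for illustration, and the permutation test is just one simple technique for this, not necessarily the method any particular study used.

```python
import random
from statistics import mean

# Hypothetical weight loss in kg for each participant; invented data.
pill = [2.1, 3.4, 1.8, 2.9, 0.5, 3.0, 2.2, 1.1]
placebo = [1.9, 0.8, 1.5, 2.0, 0.2, 1.7, 2.4, 0.9]


def permutation_p_value(treated, control, n_shuffles=10_000, seed=0):
    """One-sided p-value: the share of random relabellings whose
    difference in group means is at least as large as the observed one."""
    rng = random.Random(seed)
    observed = mean(treated) - mean(control)
    pooled = list(treated) + list(control)
    k = len(treated)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # pretend the labels were assigned at random
        if mean(pooled[:k]) - mean(pooled[k:]) >= observed:
            hits += 1
    return hits / n_shuffles


p = permutation_p_value(pill, placebo)
print(f"observed difference: {mean(pill) - mean(placebo):.2f} kg, p = {p:.3f}")
```

The number printed is the answer to "how often would I see this if the null hypothesis were true?", and nothing more. It says nothing directly about how likely the pill is to work.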

P-values are complex and Bowyer claims researchers often get them wrong, leading to false conclusions:

Most of these criticisms of studies are non-controversial among professional statisticians. They know that statistical significance and P values are routinely abused. People who write these studies are not usually statisticians, though. They are medical researchers or economists or financial analysts who are using (or quite often misusing) statistical theory.

I recommend reading Bowyer’s article and assessing his criticisms for yourself. Ultimately, Bowyer is describing not just the problems of how the media are reporting research, but the problems of what researchers themselves are reporting.

To go even more extreme, we can go a level deeper to find issues within research.

We’ve described problems in second hand reporting, first hand reporting, and researchers’ self reporting, but for some forms of research – where people’s answers form the data – we can find a problem with what the subjects of the research are even reporting.

This is what Roger Dooley describes as The Interpreter, a term first coined in neuroscience by Michael Gazzaniga. He says that this factor often renders traditional market research useless.

Most market researchers earn their living by asking questions – what people did, why they did it, what they might do in the future, and so on. The methodology varies – focus groups, Web surveys, interviews, etc. – but in most cases the fundamentals are similar. What would happen if these methods were applied to a person who had a diabolical module in his brain called “The Interpreter” that was constantly making up explanations for that person’s actions? These explanations would usually be logical and plausible, but in almost every case completely false. Unfortunately for market researchers, that person is all of us.

The problem is that we don’t always know why we made the decision we made. In this instance, we don’t really know why we made a purchasing decision, so a researcher who asks what would lead us to make that purchase again is asking a question to which we don’t know the answer. We’ve only rationalized the answer, so we can only really guess at our future behavior.

Dooley touches on some further methodological problems within market research specifically and I recommend reading his article to assess for yourself the arguments he’s posing.

The 10 step process for analyzing any article

To help give you an actionable way to quickly analyze an article, report, or study we’ve drawn from Carl Sagan, Richard Feynman, and Michael Shermer’s lists of analytic considerations to create a ten step process suitable for our audience.

- Is it a reliable source? Where is the information coming from? Is this a source you should be wary of, or one which normally produces reliable information?

- What audience is it for? Is it looking to cater to a particular group, or is it sales copy designed to overstate the significance of a finding to promote a product or service?

- Are the claims universal? A grand claim doesn’t have to be true or false to be informative. Is the author presenting specific niche information or personal experience as being universal? Can you gain value from the article without believing all the conclusions?

- Can you test the claims? What kind of information can you take from the content that you could turn into a hypothesis to test yourself? Would you be able to research further to evaluate the information?

- Is it logically sound? Is the body of the work logically consistent? Are they ignoring obvious leaps from one conclusion to the next?

- Is the argument dependent on rhetoric? Does something sound clever because it is using long winded prose (ahem) and technical jargon? How do the claims and arguments stand up if you convert them into plain English?

- Can you analyze the data? Can the data or interpretations be independently verified? Whenever we post our research at Process Street we include a link to our raw data. For our last email marketing study, we even created a microsite to display the data interactively: Inside SaaS Sales. Can you evaluate the data yourself?

- Are there existing critiques? Can you find alternative perspectives on the same topic or research? What conversations or disagreements are people already having about this topic? Can you learn more from these discussions? Hashtag trust the dialectic.

- Can you evaluate the methodology? Does the methodology appear well structured? How statistically significant are the findings and could they reflect false positives? Are the findings more easily explained by a separate unmentioned variable? Are the findings drawn from people’s responses or people’s behavior?

- Are YOU biased? Don’t just believe something because you like it. If anything that’s greater reason to be more critical.
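The question about false positives in the methodology step is worth a quick illustration. One common way they creep in is testing lots of things at once and reporting whichever one "worked". The sketch below assumes the conventional 0.05 significance threshold, tests that are independent of each other, and no real effect anywhere; the figure of 20 tests is a hypothetical chosen for the example.

```python
import random

ALPHA = 0.05  # conventional significance threshold (an assumption here)


def chance_of_false_positive(n_tests, alpha=ALPHA):
    """Probability that at least one of n_tests independent tests looks
    significant when there is no real effect in any of them."""
    return 1 - (1 - alpha) ** n_tests


def simulate(n_tests, n_studies=10_000, alpha=ALPHA, seed=0):
    """Monte Carlo check of the formula. With no real effect, each test's
    p-value is modelled as a uniform random number between 0 and 1."""
    rng = random.Random(seed)
    studies_with_hit = sum(
        any(rng.random() < alpha for _ in range(n_tests))
        for _ in range(n_studies)
    )
    return studies_with_hit / n_studies


n = 20  # hypothetical number of metrics or variants tested
print(f"formula:    {chance_of_false_positive(n):.2f}")
print(f"simulation: {simulate(n):.2f}")
```

With 20 independent tests and nothing real going on, the chance of at least one "significant" result is about 64%. So when a study reports one striking finding, it is fair to ask how many other things were measured along the way.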

This Michael Shermer video leans more toward science than to evaluating marketing claims, but it’s all the same game.

Wake up sheeple!

In conclusion, it appears my Masters in Philosophy was not a colossal waste of money.

I’m just kidding, I’m still paying it off now.

You don’t need a Masters or any other kind of formal training or study to be critical of the information in front of you. All you need is a process.

When it comes to political articles in your timeline, you can believe them if you want to. That’s no business of mine.

But when it comes to making business decisions or formulating strategy direction for your professional work, you need to be sure you’re making those decisions on the most solid ground available to you.

Have you had bad experiences with poor readings of data? Have you been misled by dodgy advice? Let me know in the comments below!

Adam Henshall

I manage the content for Process Street and dabble in other projects inc language exchange app Idyoma on the side. Living in Sevilla in the south of Spain, my current hobby is learning Spanish! @adam_h_h on Twitter. Subscribe to my email newsletter here on Substack: Trust The Process. Or come join the conversation on Reddit at r/ProcessManagement.