You have probably used Linux today, especially if you don’t have an iPhone. And if you browsed the web today, there’s a good chance that the website you visited was served by Linux, too.

Linux is an operating system, but unlike software like Microsoft Windows and macOS, Linux was developed by a self-organized community of volunteers.

Over time, with the effort of over 10,000 developers and evolving processes to manage the scale of work, the Linux kernel has grown to over 20,000,000 lines of code in total. It forms the stable foundation for…

- Every Android phone and tablet on the planet

- 66% of the world’s servers

- 100% of the top 500 supercomputers

This technology didn’t come from an orchestrated team with a thick policy book and layers of management. It came from a few carefully chosen and culturally embedded policies, and a shared mission.

In this post, I look at how a technology so essential, complex and important could have been produced so effectively without traditional management structure. But first…

Why is the Linux kernel so impressive?

A kernel is the very core of an operating system. It is the orchestrator of all other software processes on your computer or smartphone, dividing up resources and providing a way for the hardware and software to communicate.

In the words of G. Pascal Zachary, an operating system’s kernel can be likened to “a chief of household staff who was so diligent that he served the family upstairs at any moment, night or day […] on call, handling every request”. The kernel keeps the machine running, no matter what it comes up against. It was an essential leap from the world where computers could run just one application at a time, to the world we know today.

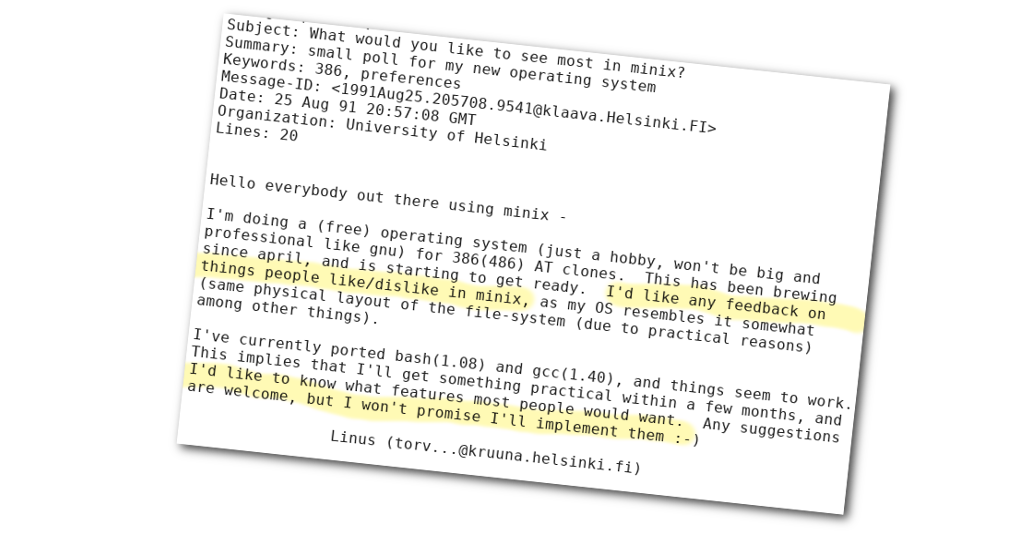

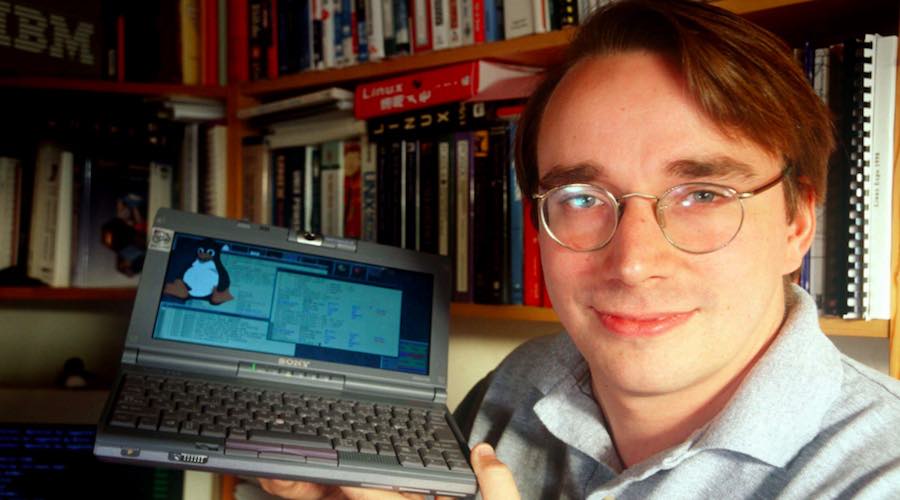

The Linux kernel, based on UNIX, was developed in the early 1990s by Linus Torvalds.

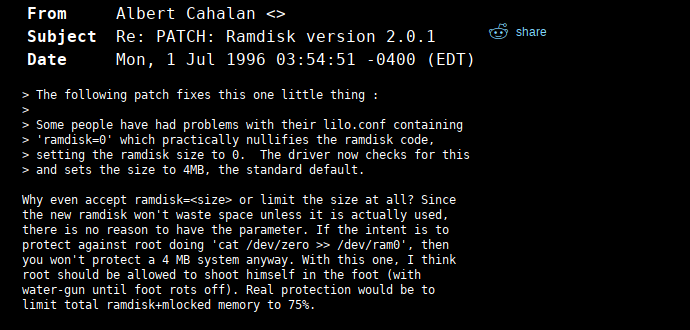

By 1991, Torvalds had released the first version — just 10,000 lines of code — and sparked excitement in the software development community with the humble email announcement seen above.

Empowered by the collaborative power of the internet for the first time in history, developers contributed their own coding skills and testing time for free as the kernel user base exploded in size. Users who were willing to experiment could try to cobble together a patch when they found something broken, and discuss how to improve the software.

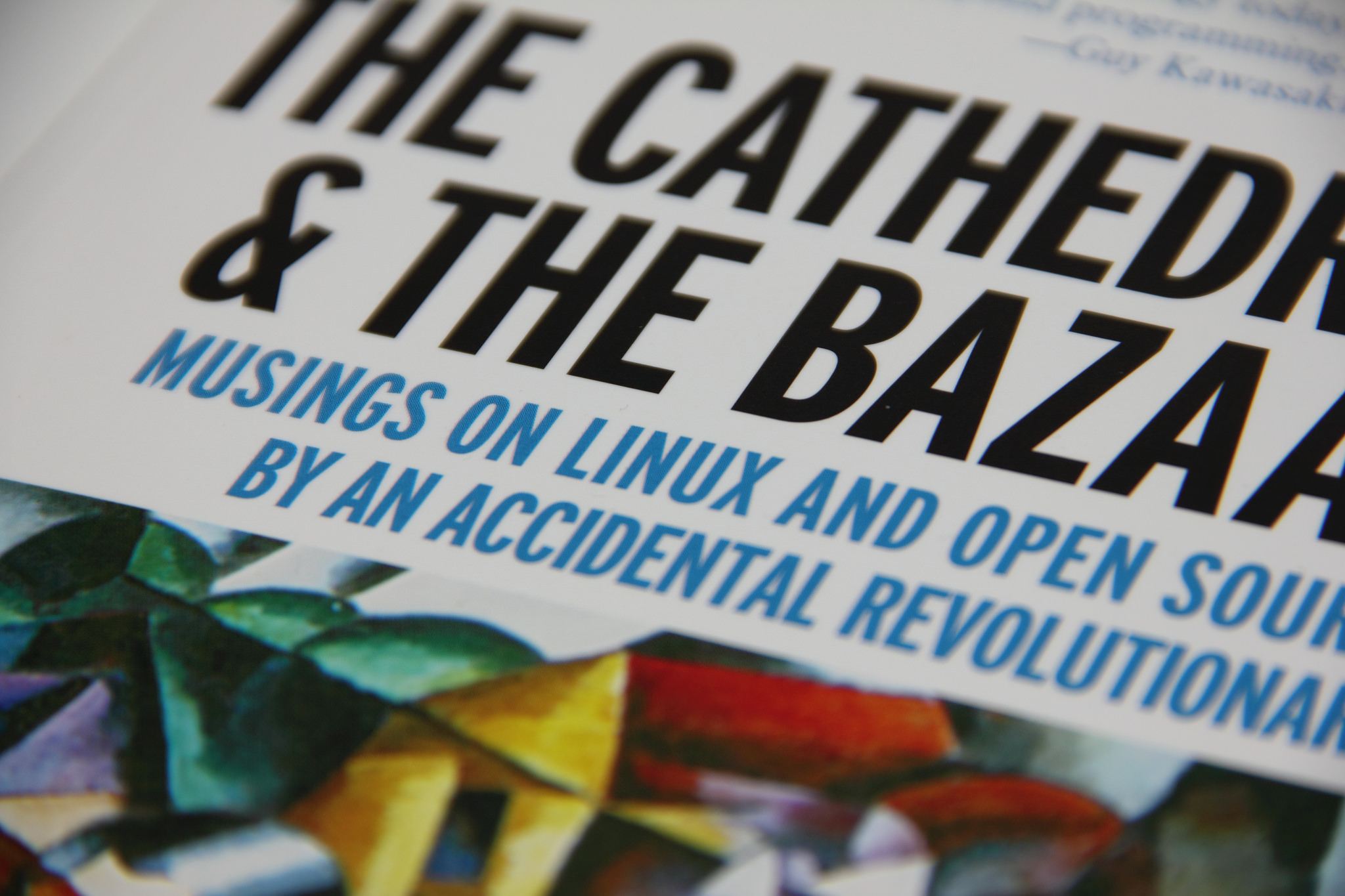

As Eric S. Raymond describes in his book on early open source software, The Cathedral and The Bazaar, Linus Torvalds’ management of kernel developers grew to be efficient, self-organizing, and more than capable of producing arguably one of the most complex and widely-used pieces of software on the planet.

In this piece, I look at the processes and policies that have emerged as necessary support for the Linux kernel project as it has evolved over the years.

How, without formal process, as a 21-year-old computer science student, did Torvalds guide the kernel project to the heights it reached…

…And what’s so hard about managing developers, anyway?

According to research by Geneca, over 75% of business and IT executives believe their software projects are bound to fail. The difficulty of producing reliable software and managing those who do it has spawned countless management books, productivity studies, and leadership frameworks.

“The sheer complexity of software means it is impossible to test every path”, writes Kynetix CEO Paul Smyth on Finextra. “A software application is like an iceberg – 90% of it is not visible. The major complexity in the application lies below the waterline”.

Any software project of significant size, whether that’s a CRM or an operating system like Microsoft Windows, is too large to fit into any one person’s head. It requires the shared knowledge and collaboration of many contributors. This means that developers work on specialized parts of the application while needing to understand how their work affects the whole.

“No one mind can comprehend it all”, remarked the manager of a team of 250 Microsoft software developers while they were building an operating system from scratch, according to G. Pascal Zachary in Show Stoppers!, a book that tells the story of a team of Microsoft software developers racing to complete Windows NT in 1993.

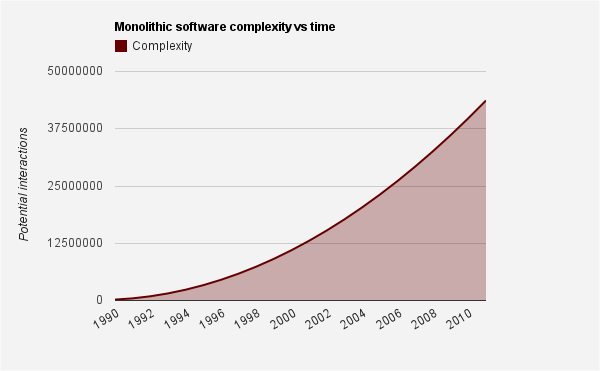

The larger the project, the slower changes can be implemented. Research from Steve McConnell supports this, finding that code is written at a 5-10x slower rate on projects of over 10,000,000 lines. Furthermore, a study of Microsoft’s development practices revealed that there are roughly 10-20 bugs for each 1,000 lines of code.

Despite the size of the project and the number of contributors, development of the Linux kernel happens rapidly, and its large, enthusiastic userbase catches bugs and writes patches to quickly ship improvements.

In its early development days – around 1991 – it wasn’t unheard of for Torvalds to release more than one new kernel per day. Now, in a more mature stage and depended upon by 80% of smartphones and the majority of internet servers, the desired release period is 8 to 12 weeks. Each release, the kernel sees an average of 10,000 patches from 2,000 developers – all wrestling with over 20,000,000 lines of code.
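To put those release figures in perspective, here is a rough back-of-the-envelope calculation. It uses only the numbers quoted above and assumes patches arrive evenly across the release window, which is a simplification rather than a description of how the kernel's merge cycle actually behaves.

```python
# Figures quoted in the article. The 8 to 12 week window is the stated
# release target, and an even spread of patches is an assumption.
PATCHES_PER_RELEASE = 10_000
DEVELOPERS_PER_RELEASE = 2_000
RELEASE_WEEKS = (8, 12)


def patches_per_day(patches: int, weeks: int) -> float:
    """Average number of patches per calendar day over a release window."""
    return patches / (weeks * 7)


fastest = patches_per_day(PATCHES_PER_RELEASE, RELEASE_WEEKS[0])
slowest = patches_per_day(PATCHES_PER_RELEASE, RELEASE_WEEKS[1])
per_developer = PATCHES_PER_RELEASE / DEVELOPERS_PER_RELEASE

print(f"{slowest:.0f} to {fastest:.0f} patches per day")          # 119 to 179 patches per day
print(f"{per_developer:.0f} patches per developer per release")   # 5 patches per developer per release
```

Even at the slow end, that is more than a hundred changes a day to review and merge.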

What are the management techniques and processes required to orchestrate this level of collaboration and productivity? Well, they weren’t written up by a department head or business book author. They developed organically, with guidance from the project’s “benevolent dictator”, Linus Torvalds.

Even in its nascent form, the Linux kernel was being collaborated on by hundreds of volunteers who submitted their work directly to Torvalds for review. How did a bad-tempered, anti-social hacker manage disputes, contributions, and communication between thousands of developers for almost two decades?

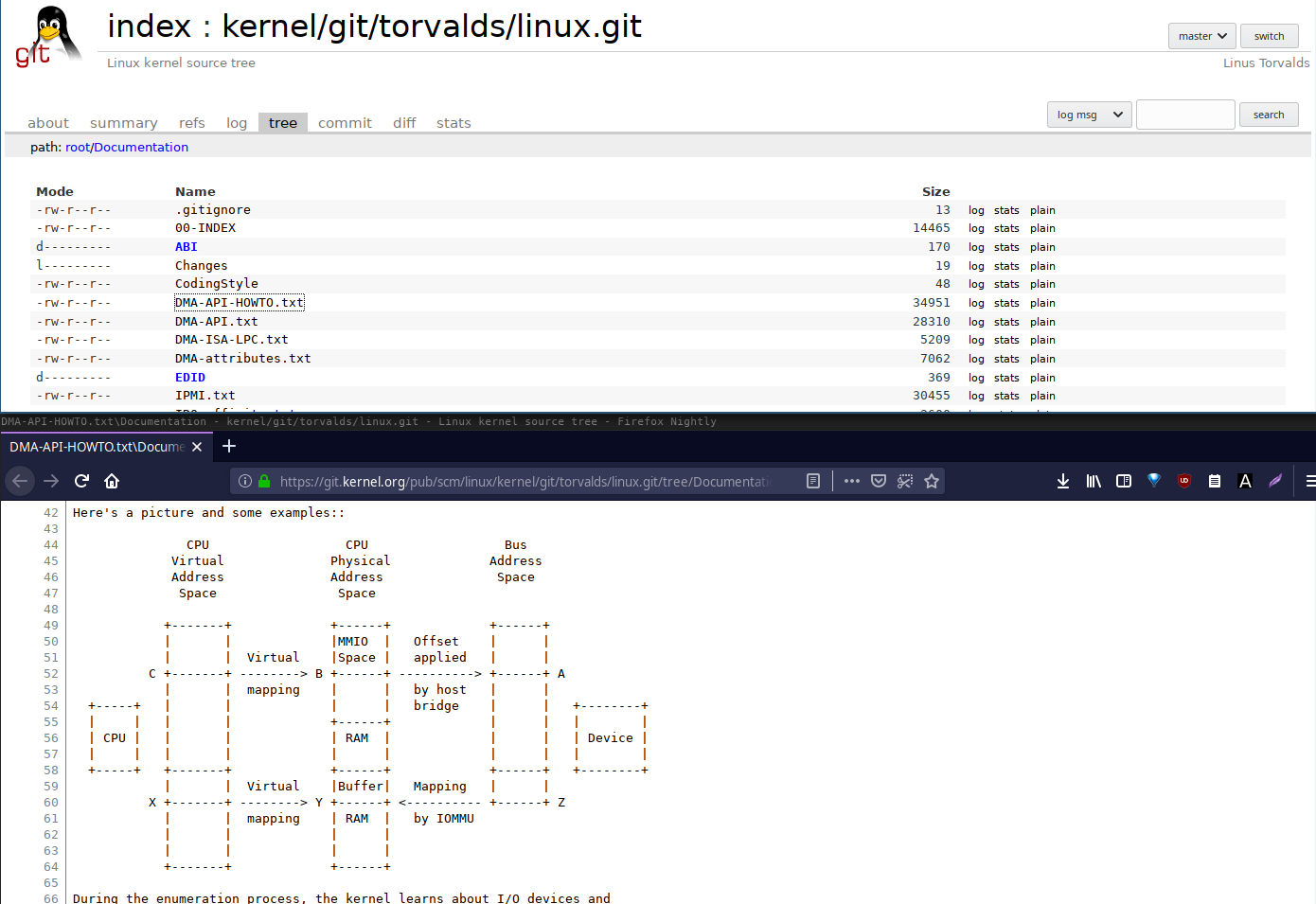

How policy, process and 15,000,000 lines of code are documented

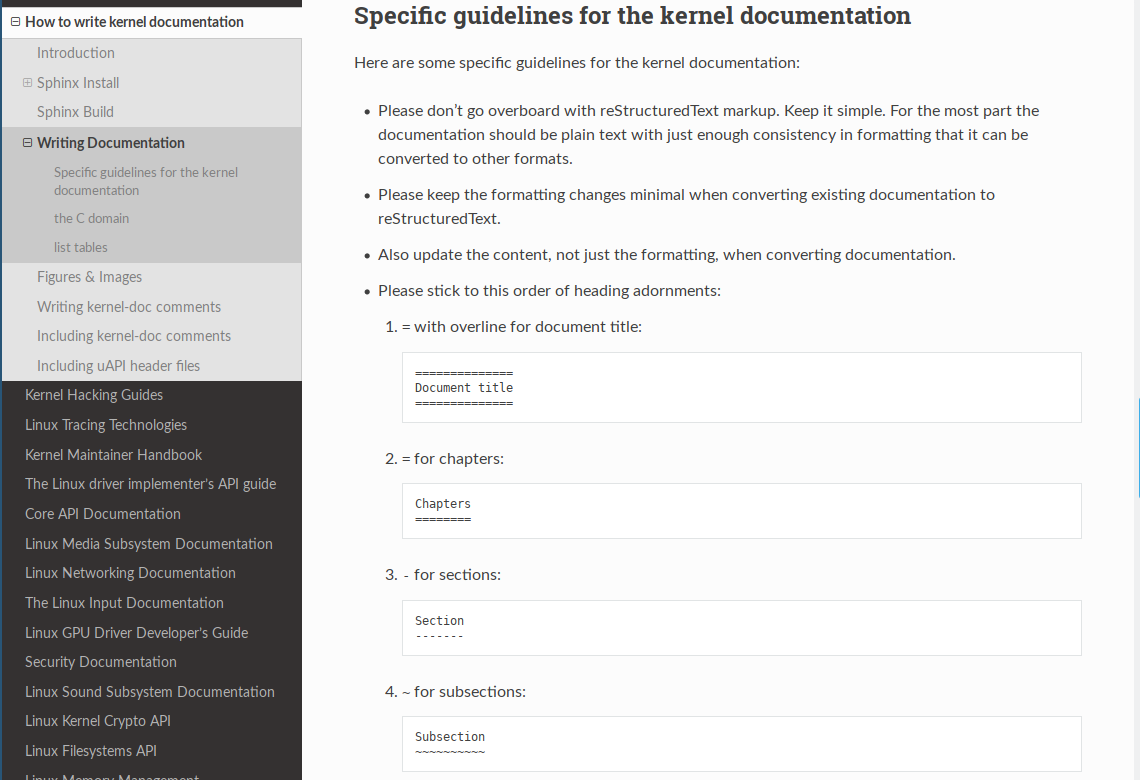

Unlike in a traditional organization, new kernel developers aren’t strictly ‘onboarded’ into the community but are instead expected to have fully read and understood the introductory documentation. The kernel project’s focus on documentation was a necessity both because of the technical complexity and the sheer number of developers.

Numerous FAQs, how-tos and getting started guides exist in the kernel’s documentation repository, and even more in wikis created and maintained by kernel developers around the world.

The way documentation is written and improved reflects the way the kernel is developed: collaboratively, openly, and incrementally.

By leveraging the eyeballs and specializations of a huge group of people, the documentation can be created, tested and proofread far more efficiently than if done by a smaller, dedicated team. To bend Linus’ Law into terms a content editor might better understand: with enough eyeballs, all typos are obvious.

As well as material for technical support, the kernel development community has created a library of process documentation designed to smooth the human side of collaboration.

On the index page of “A guide to the Kernel Development Process”, a paragraph reads:

“The purpose of this document is to help developers (and their managers) work with the development community with a minimum of frustration. It is an attempt to document how this community works in a way which is accessible to those who are not intimately familiar with Linux kernel development (or, indeed, free software development in general).”

No one is born with an innate knowledge of the git version control system, or how to submit a patch to a mailing list. That’s why the development process must be documented: explaining the same basic information to one person at a time doesn’t scale!

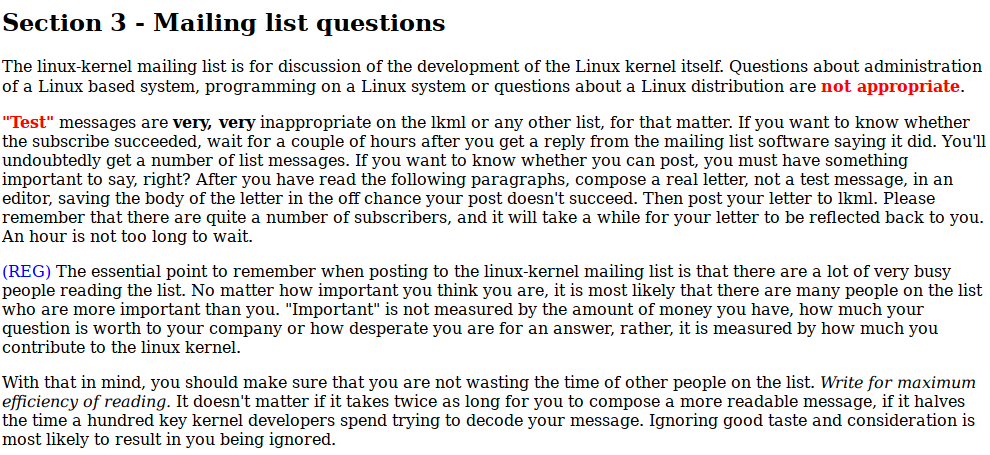

How communication between 1000s of developers is managed

Conversations between developers happen out in the open, in the kernel development mailing lists. To this day, email is still the preferred tool because of its simplicity. These mailing lists are archived and organized, making up a body of living documentation and history.

Communicating in public has a benefit similar to that of using a tool like Slack today: information is kept safe and searchable instead of evaporating. To manage such a firehose of information, however, the kernel project developed a communication policy and distributed it as part of the documentation.

Communication and collaboration on such a scale was not possible before the internet, so early projects such as this needed to write and distribute quick and effective solutions for community management, etiquette, and code presentation problems.

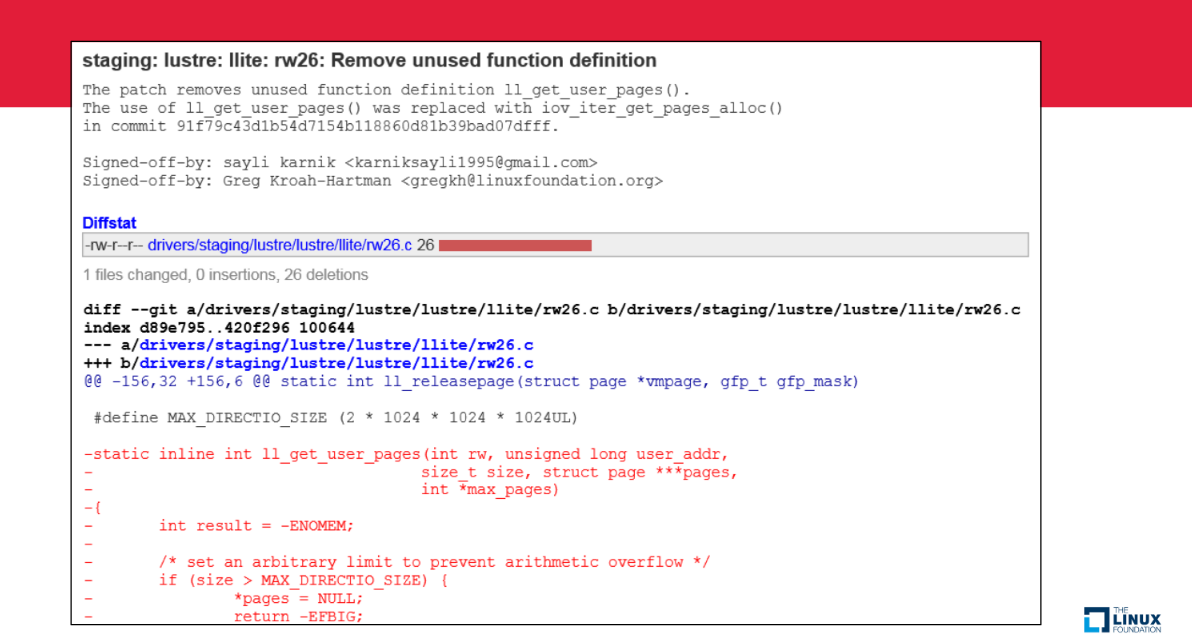

The kernel documentation includes rules for submitting patches, which make it easier for others to review, edit, and test contributed code. Patches must be emailed inline as plain text, not attached. Each email must contain only one patch, and the code must obey specific coding style guidelines.

This rigid standardization is absolutely necessary for a project as large as the kernel, as it is for projects of any size. It helps reduce errors in a space where a single error can have a ripple effect that produces unstable code in the future, or wastes the time of many testers and developers.
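To make the submission rules above concrete, here is a minimal sketch of how two of them could be checked automatically. The function `check_patch_email` and its heuristics are hypothetical and are not part of the kernel's tooling: it assumes a git-style patch, where a line containing only `---` separates the commit message from the diff, and it makes no attempt at style checking (the kernel tree ships its own script, `scripts/checkpatch.pl`, for that).

```python
from email import message_from_string
from typing import List


def check_patch_email(raw: str) -> List[str]:
    """Return rule violations for a raw patch email (hypothetical checker)."""
    msg = message_from_string(raw)

    # Rule: plain text, not attached. A multipart or non-text/plain
    # message implies attachments or HTML content.
    if msg.is_multipart() or msg.get_content_type() != "text/plain":
        return ["patch must be inline plain text, not an attachment"]

    problems = []
    lines = msg.get_payload().splitlines()

    # Assumption: file headers in a unified diff start with "+++ ".
    if not any(line.startswith("+++ ") for line in lines):
        problems.append("no inline diff found in the message body")

    # Rule: one patch per email. Heuristic: each git-style patch has
    # exactly one line consisting solely of "---".
    if sum(1 for line in lines if line == "---") > 1:
        problems.append("more than one patch in a single email")

    return problems


# A made-up example message, for illustration only.
EXAMPLE = """\
From: dev@example.org
Subject: [PATCH] fix typo in README
Content-Type: text/plain; charset=utf-8

Fix a typo.
---
 README | 2 +-
 1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README b/README
--- a/README
+++ b/README
@@ -1 +1 @@
-Helo
+Hello
"""

print(check_patch_email(EXAMPLE))  # prints []
```

A checker like this only catches mechanical mistakes. The point of the written rules is that contributors can follow them before a reviewer ever has to ask.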

How critical decisions are (not) made

To quote Torvalds:

“The name of the game is to avoid having to make a decision. In particular, if somebody tells you “choose (a) or (b), we really need you to decide on this”, you’re in trouble as a manager. The people you manage had better know the details better than you, so if they come to you for a technical decision, you’re screwed. You’re clearly not competent to make that decision for them.” – Linux Kernel Development Documentation

As well as avoiding a managerial hierarchy, the Linux project had clear rules and documentation that helped make decisions without the need for discussion, debate, or (many) mailing list flame wars. A look at the archives will show you that avoiding flame wars isn’t always possible, but what is possible is to create policy that removes the burden of decision.

“Thus the key to avoiding big decisions becomes to just avoiding to do things that can’t be undone. Don’t get ushered into a corner from which you cannot escape. A cornered rat may be dangerous – a cornered manager is just pitiful.”

Show Stoppers! reveals that Bill Gates’ philosophy is similar. He “admired programmers and invariably put them in charge of projects, where they could both manage and program […] to avoid a situation in which professional managers, with either no programming experience or out-of-date knowledge, held sway”.

How contributors are oriented around a strong common goal

In the case of Linux, as with many popular open source projects, the kernel didn’t emerge having been collaboratively designed by a large group of contributors; rather, it was improved upon incrementally without making decisions that destabilize the strong base of work so far. This ties in well with Torvalds’ philosophy on decision-making in management, but also with a philosophy embedded into the code itself.

Linux is based on UNIX, which is an earlier operating system designed around a set of zen-like principles. As explicitly stated in the UNIX Philosophy:

“Design and build software, even operating systems, to be tried early, ideally within weeks. Don’t hesitate to throw away the clumsy parts and rebuild them.”

Both Torvalds and Raymond (who sought to replicate the success of Torvalds’ community-building) found that releasing early and often helped to rally contributors around an exciting, growing project that they can see a future in. Raymond boiled it down to two key things that a project cannot fail to do when released into the world:

- Run

- Convince potential co-developers (users) that the project can be evolved into something great soon

It’s with these same principles that many of today’s startups launch, Process Street included.

Above is Process Street in 2014. Sign up for an account to see how far we’ve come.

Is this a repeatable process?

On the surface, the sudden coming-together of one of the most intricate human creations seems like alchemy. But by picking apart the components, it is easier to see an underlying process. At the time of writing The Cathedral and The Bazaar, Eric S. Raymond was simultaneously running the development of an open source email client. By open-sourcing it, Raymond was attempting to replicate the community involvement and ultimate success of the kernel project.

Many of the basic tenets of the Bazaar model, as he called it, will be immediately familiar to anyone in the startup world:

- Every good work of software starts by scratching a developer’s personal itch.

- Treating your users as co-developers is your least-hassle route to rapid code improvement and effective debugging.

- Release early. Release often. And listen to your customers.

- Given a large enough beta-tester and co-developer base, almost every problem will be characterized quickly and the fix obvious to someone.

- If you treat your beta-testers as if they’re your most valuable resource, they will respond by becoming your most valuable resource.

- The next best thing to having good ideas is recognizing good ideas from your users. Sometimes the latter is better.

- Often, the most striking and innovative solutions come from realizing that your concept of the problem was wrong.

- To solve an interesting problem, start by finding a problem that is interesting to you.

- Provided the development coordinator has a communications medium at least as good as the Internet, and knows how to lead without coercion, many heads are inevitably better than one.

In short, it is a repeatable process, and one that has been successfully adopted from the world of open source into the startup mentality. It manifests in agile, lean, Six Sigma, and the attitudes and policies that startups have developed today. While it’s not often mentioned in the same conversation, the methodology that evolved around early operating systems shares a lot with today’s idea of iterating on a minimum viable product.

Benjamin Brandall

Benjamin Brandall is a content marketer at Process Street.