Fundraising is not easy. It can be a long, hard journey.

But whatever your approach to fundraising, the first step is usually building a list of target investors to approach.

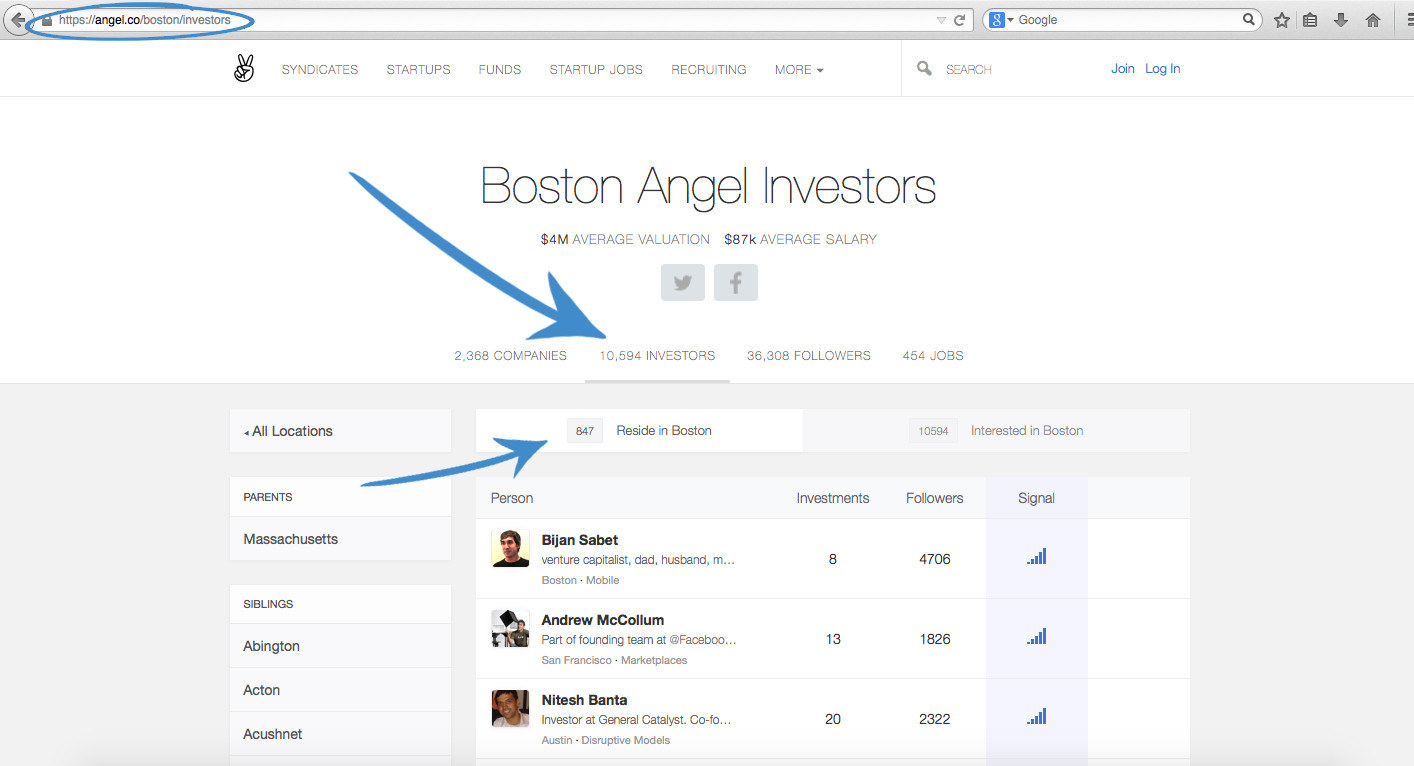

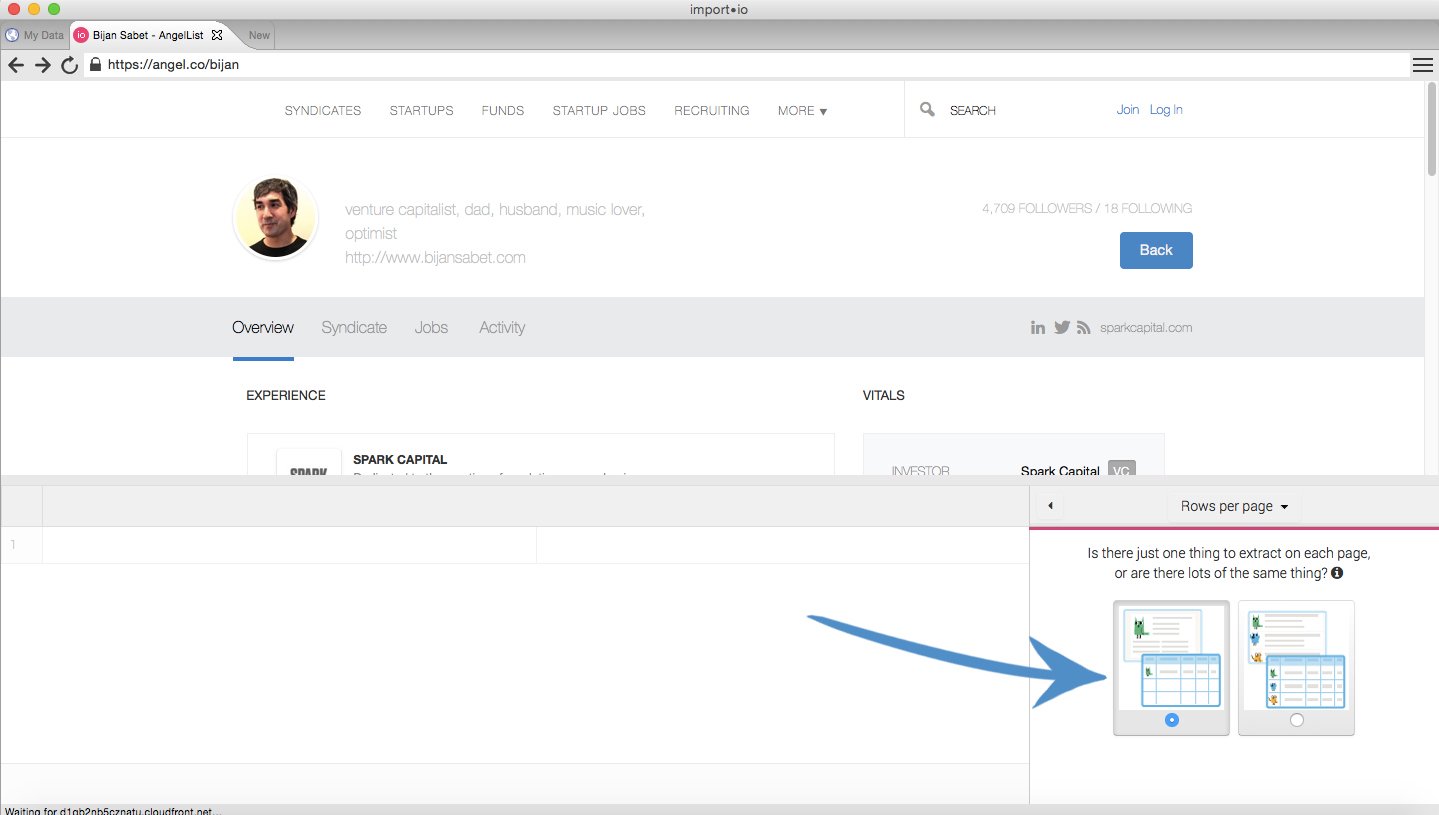

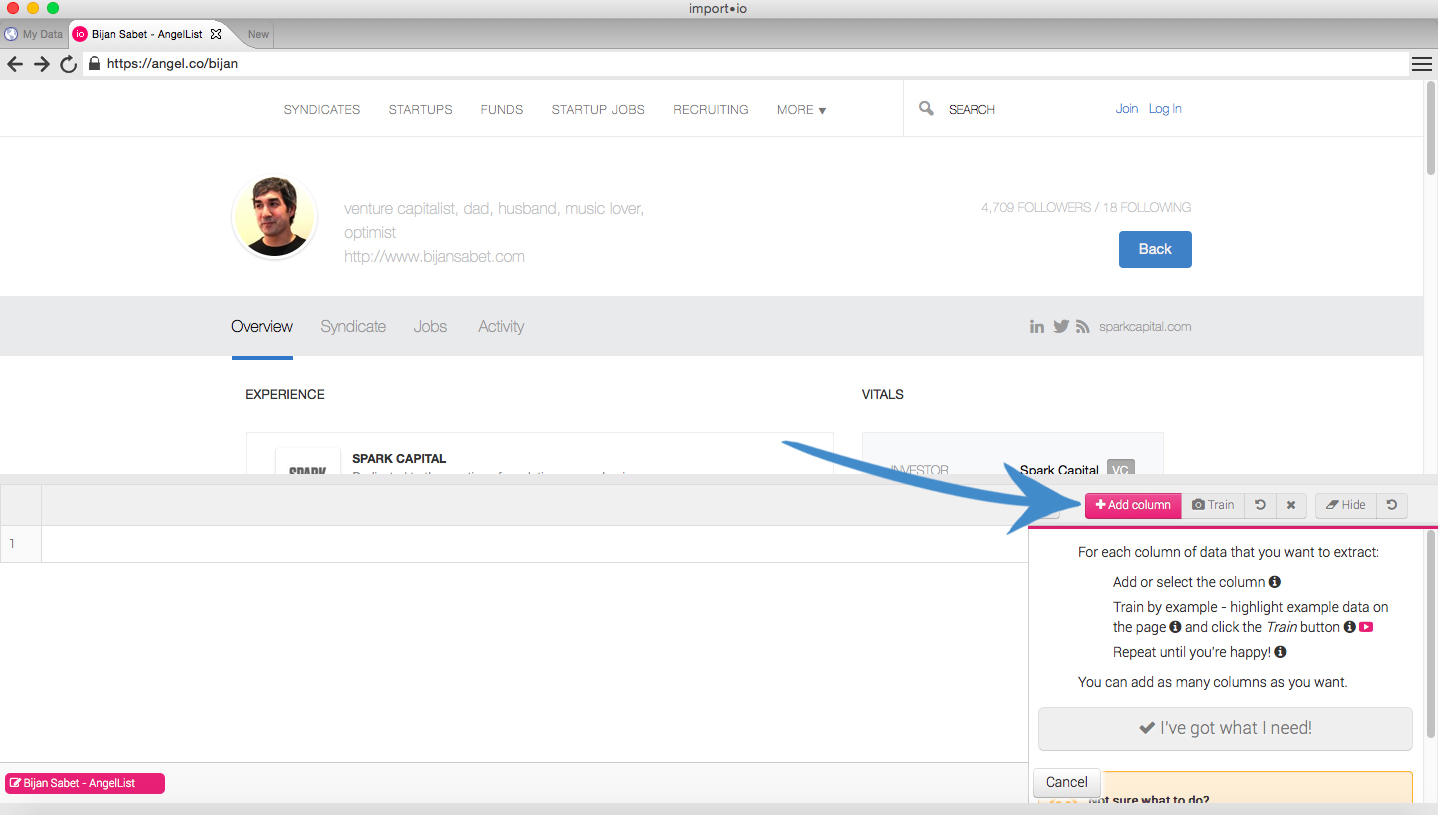

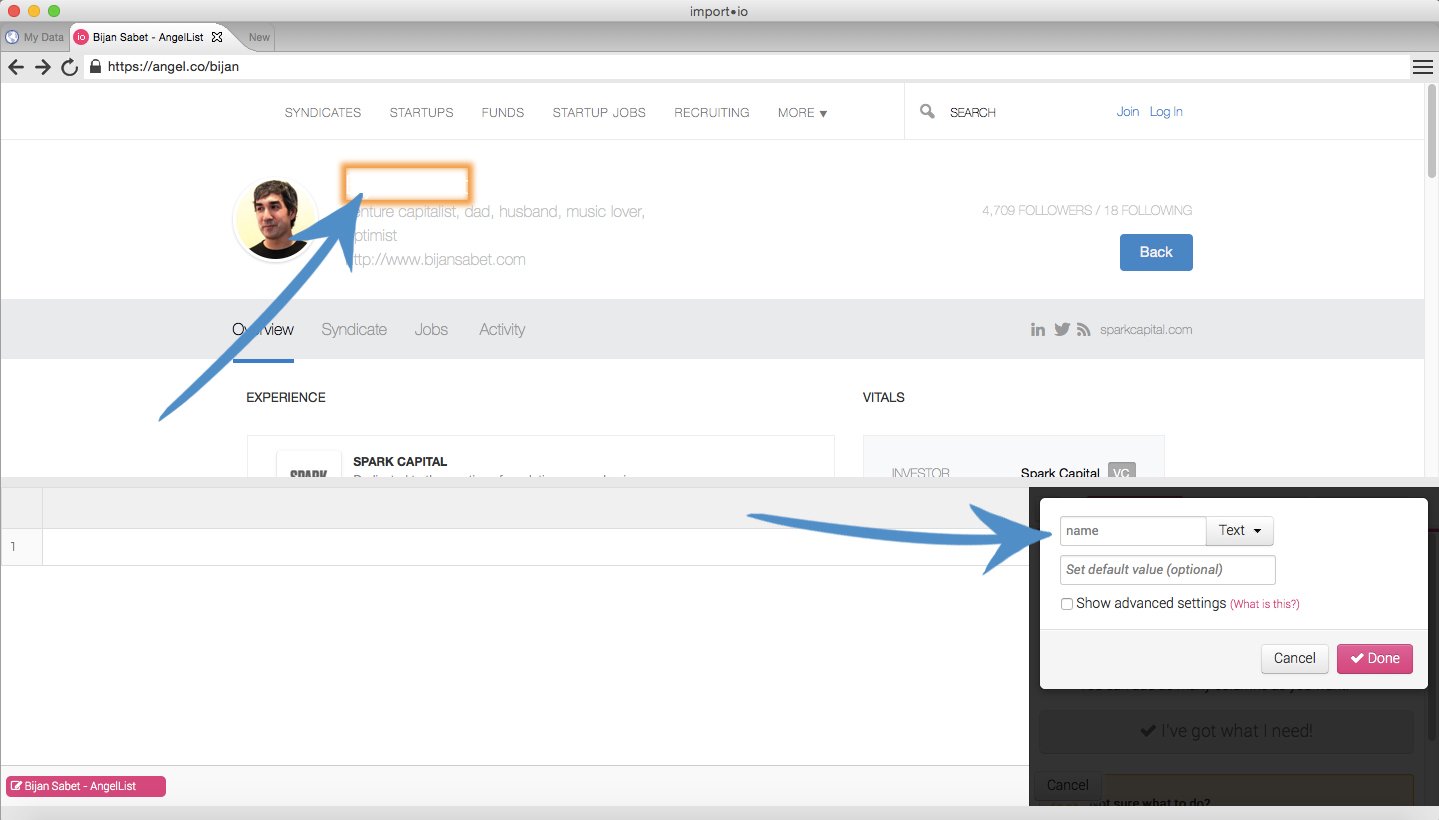

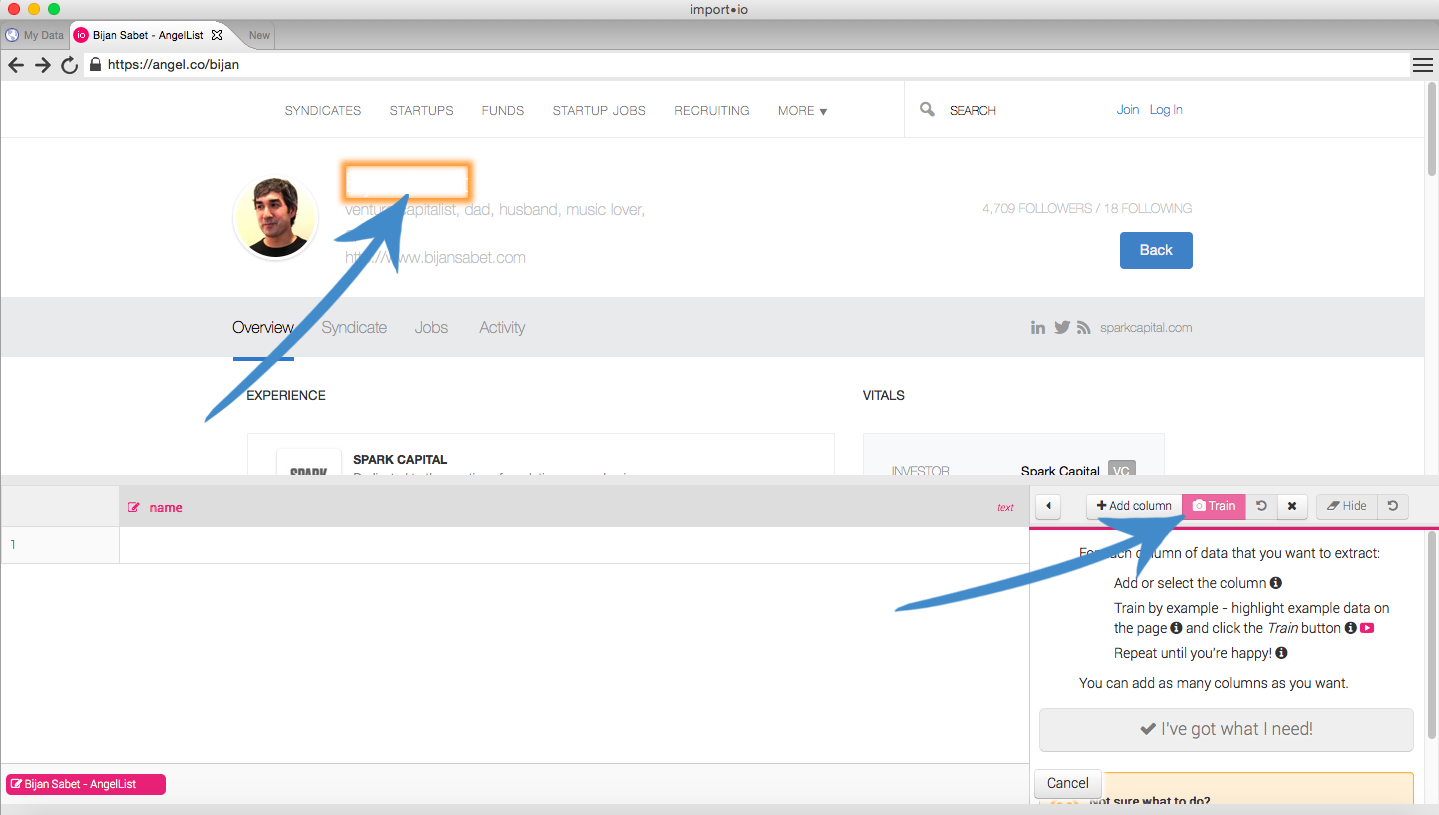

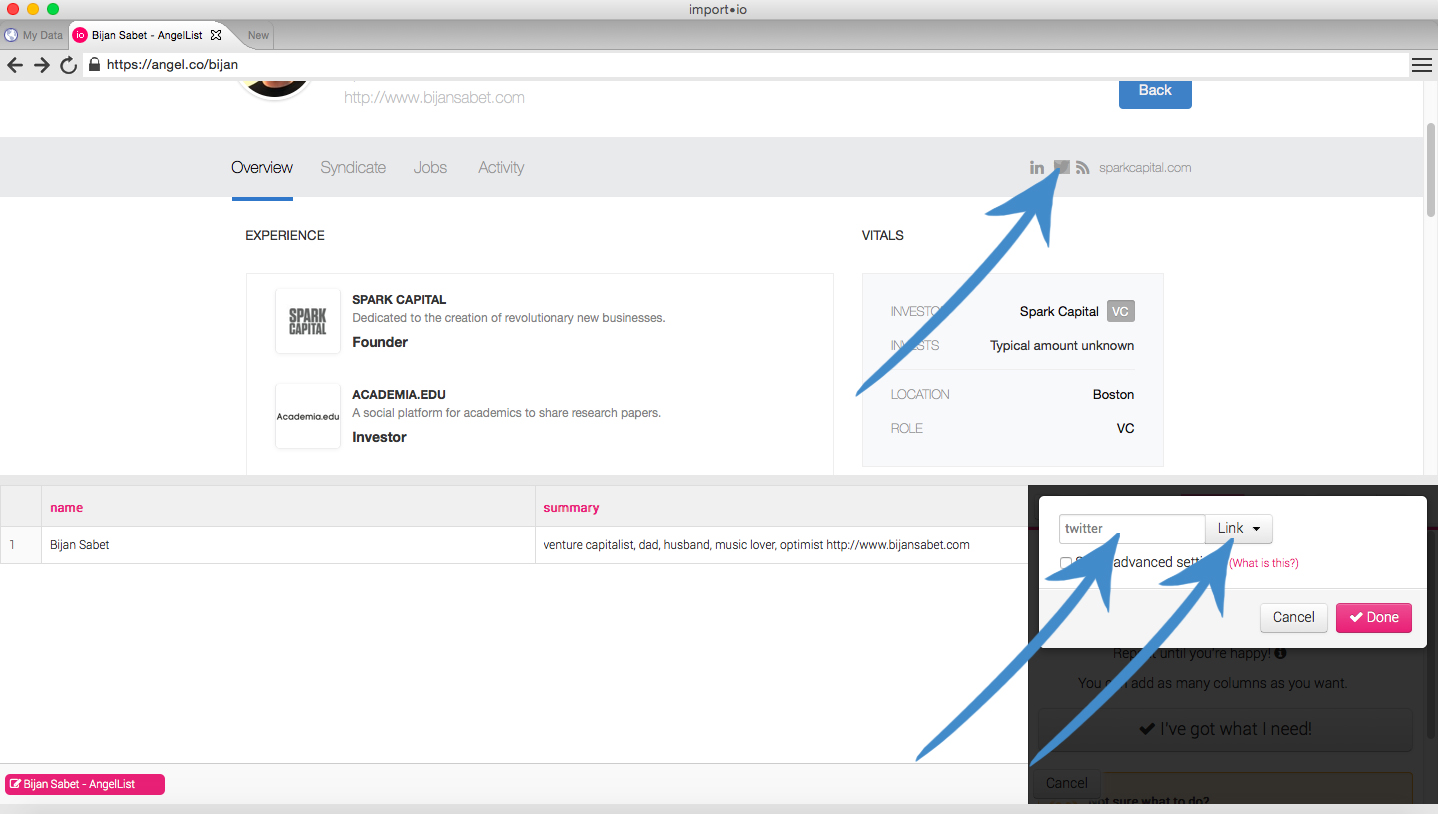

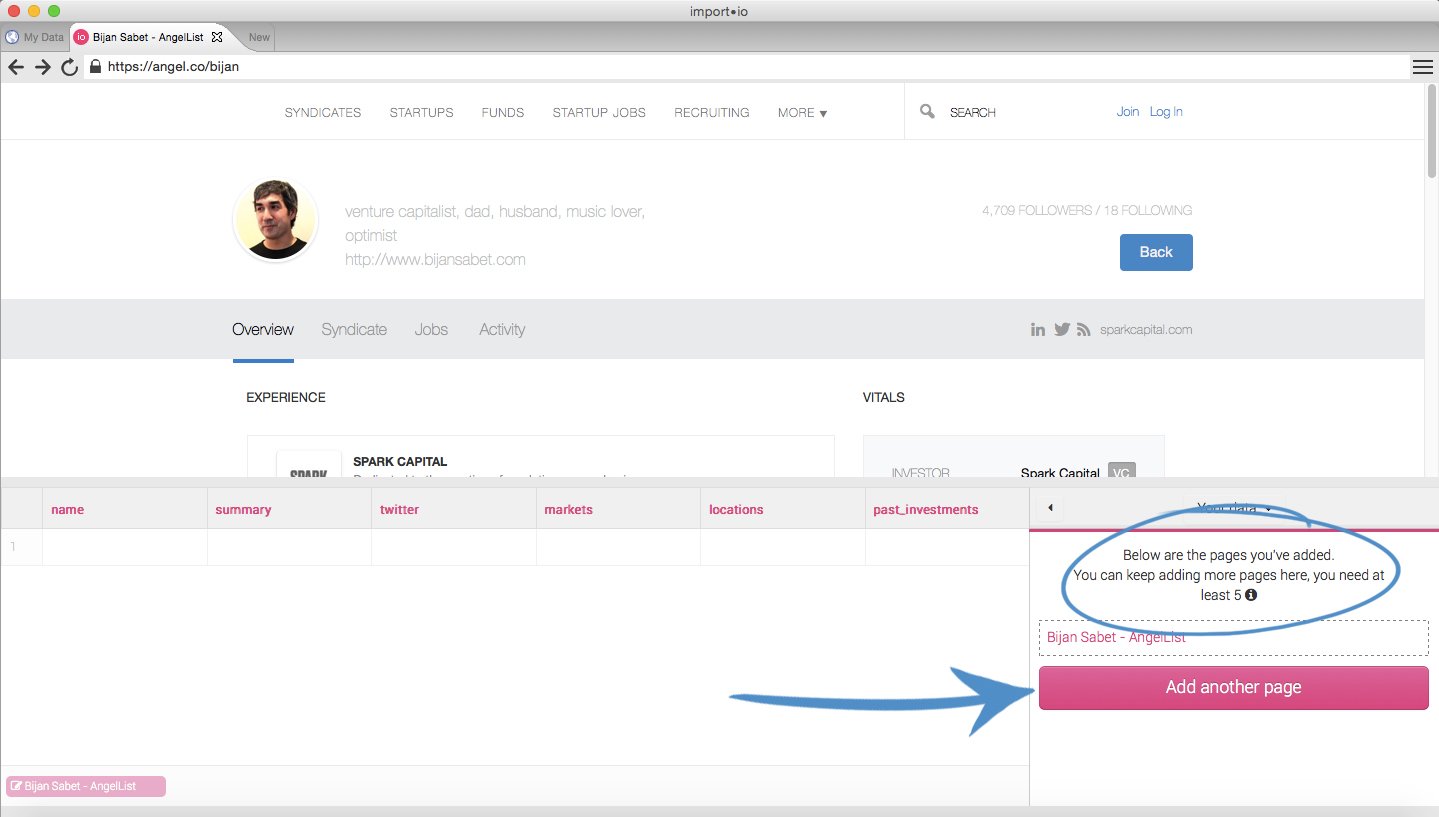

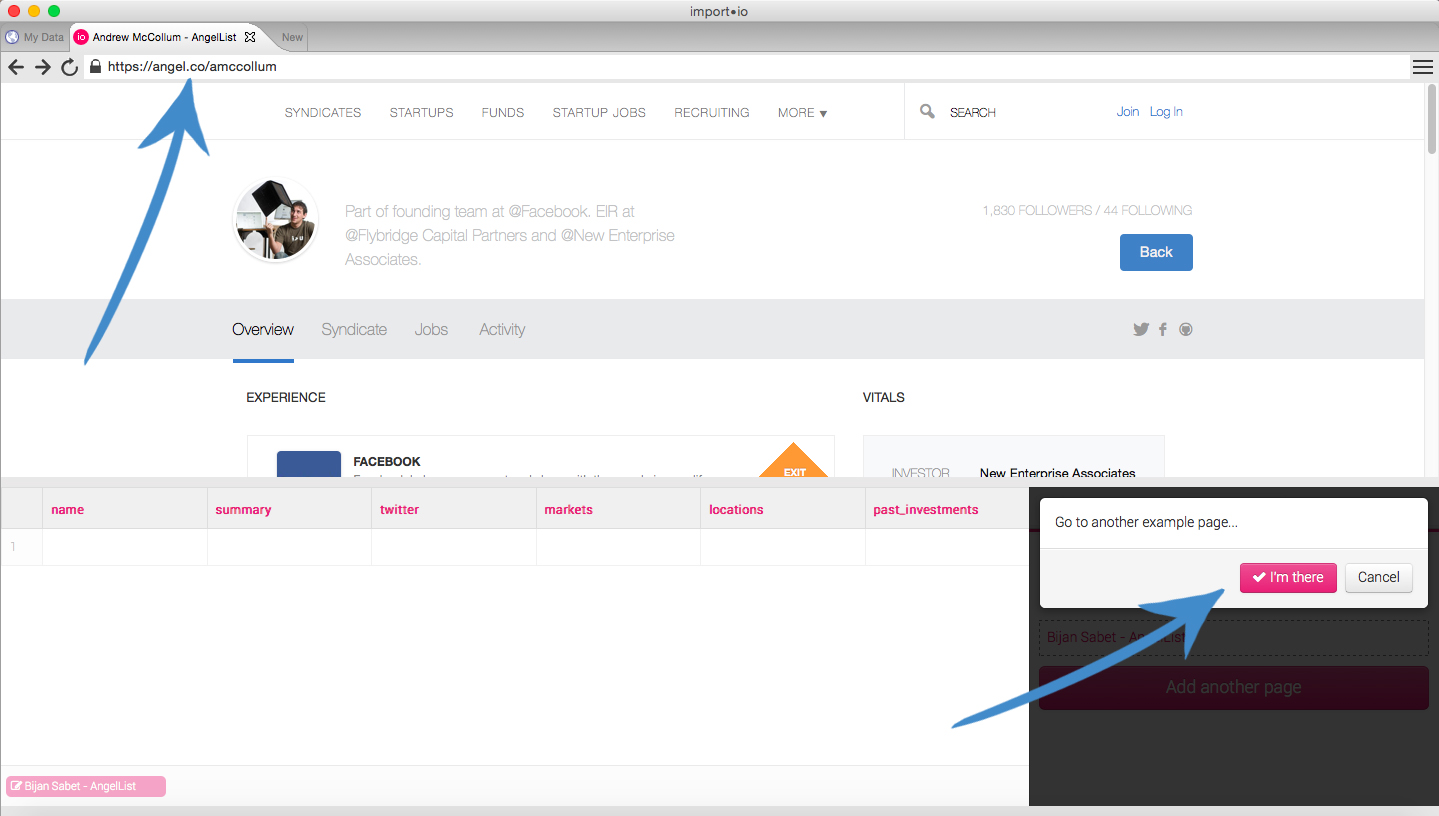

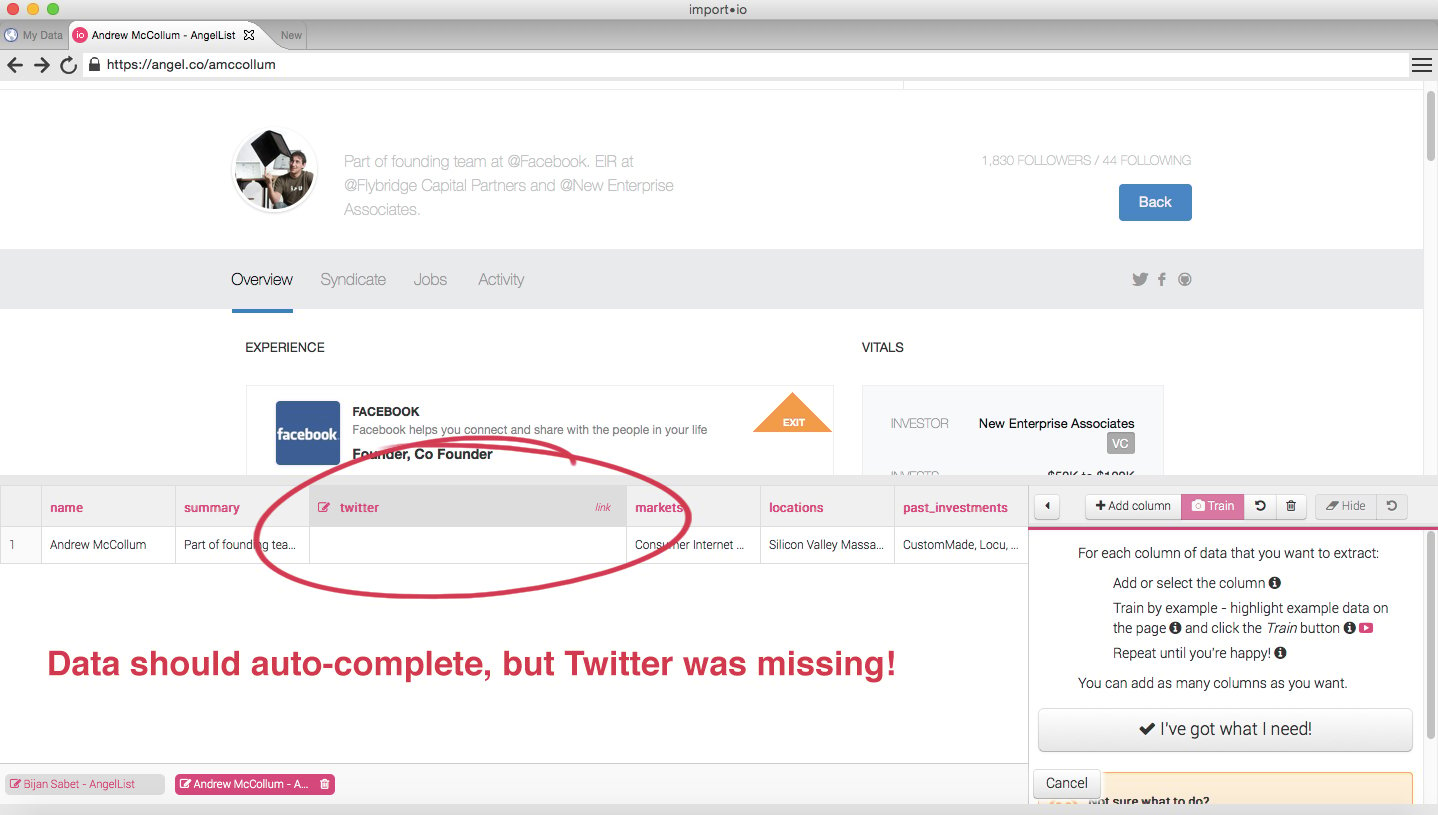

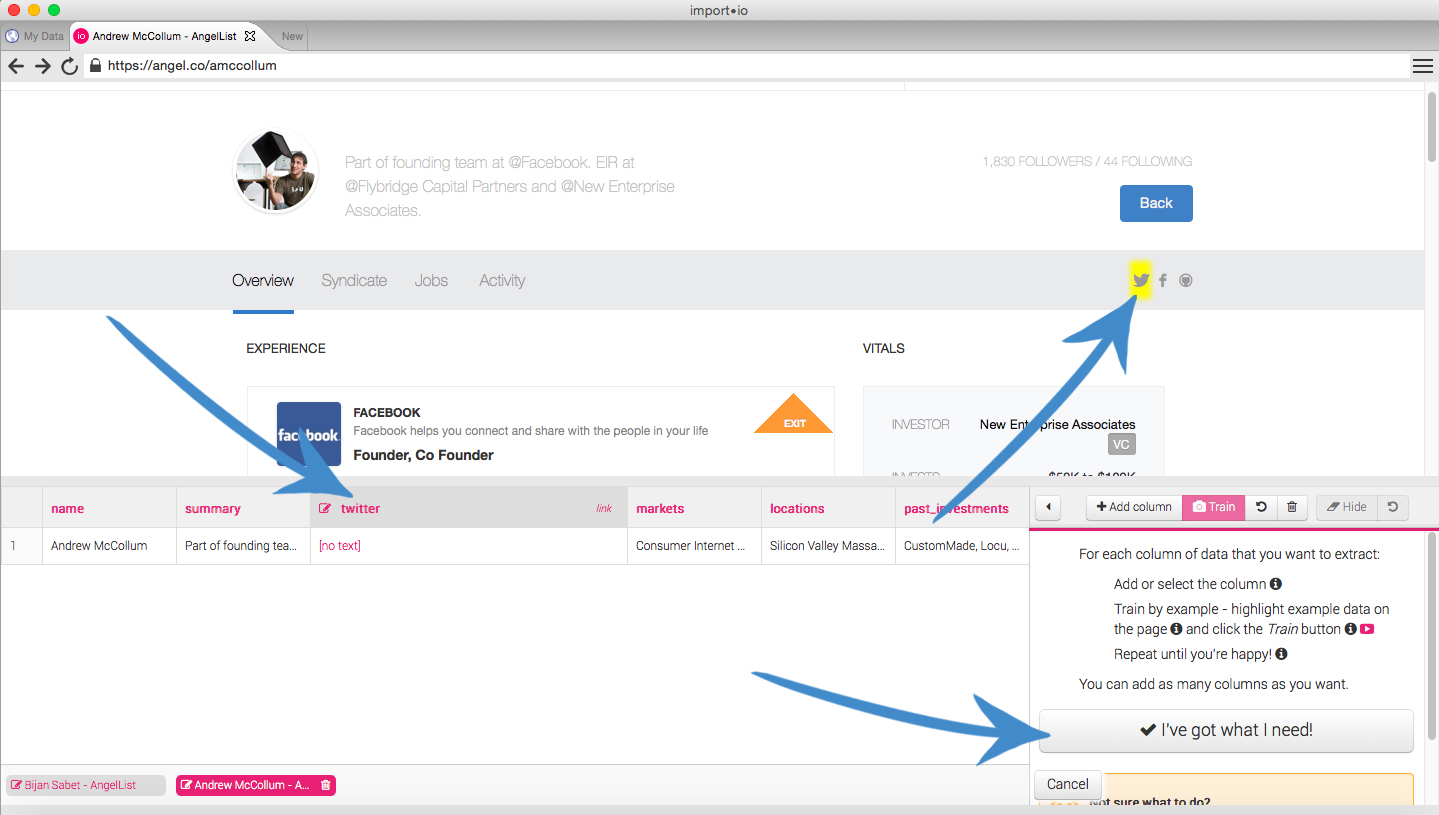

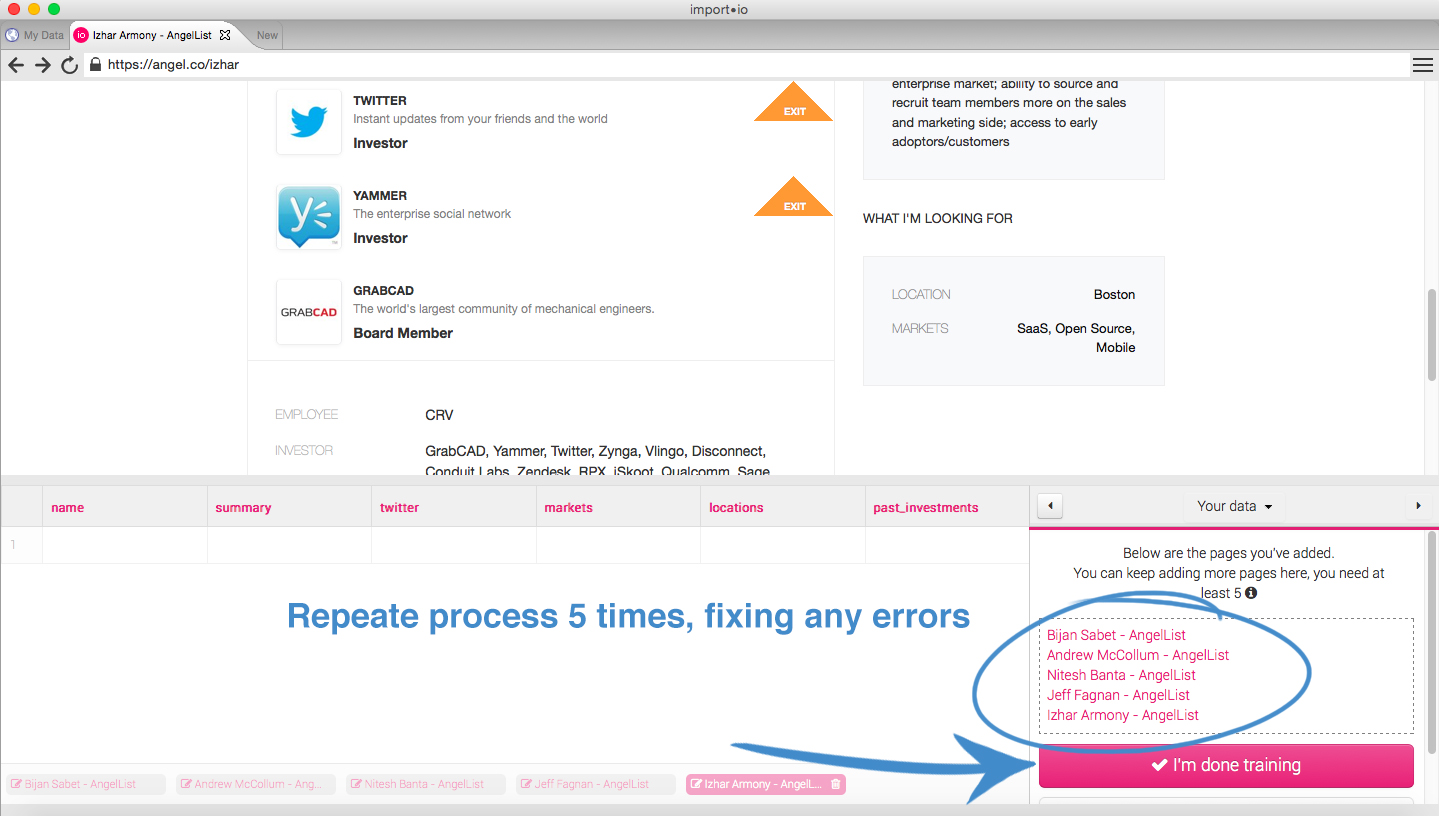

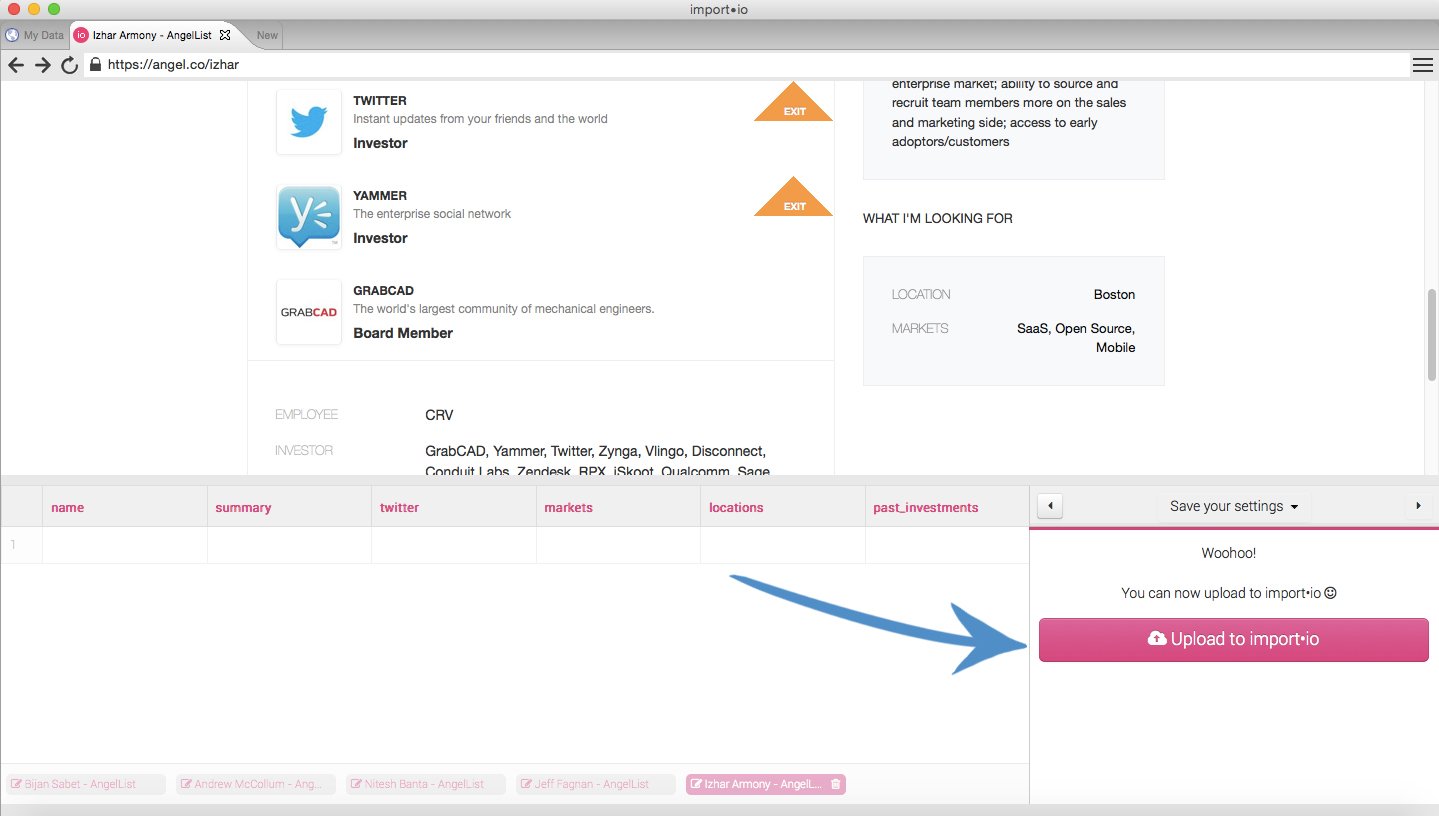

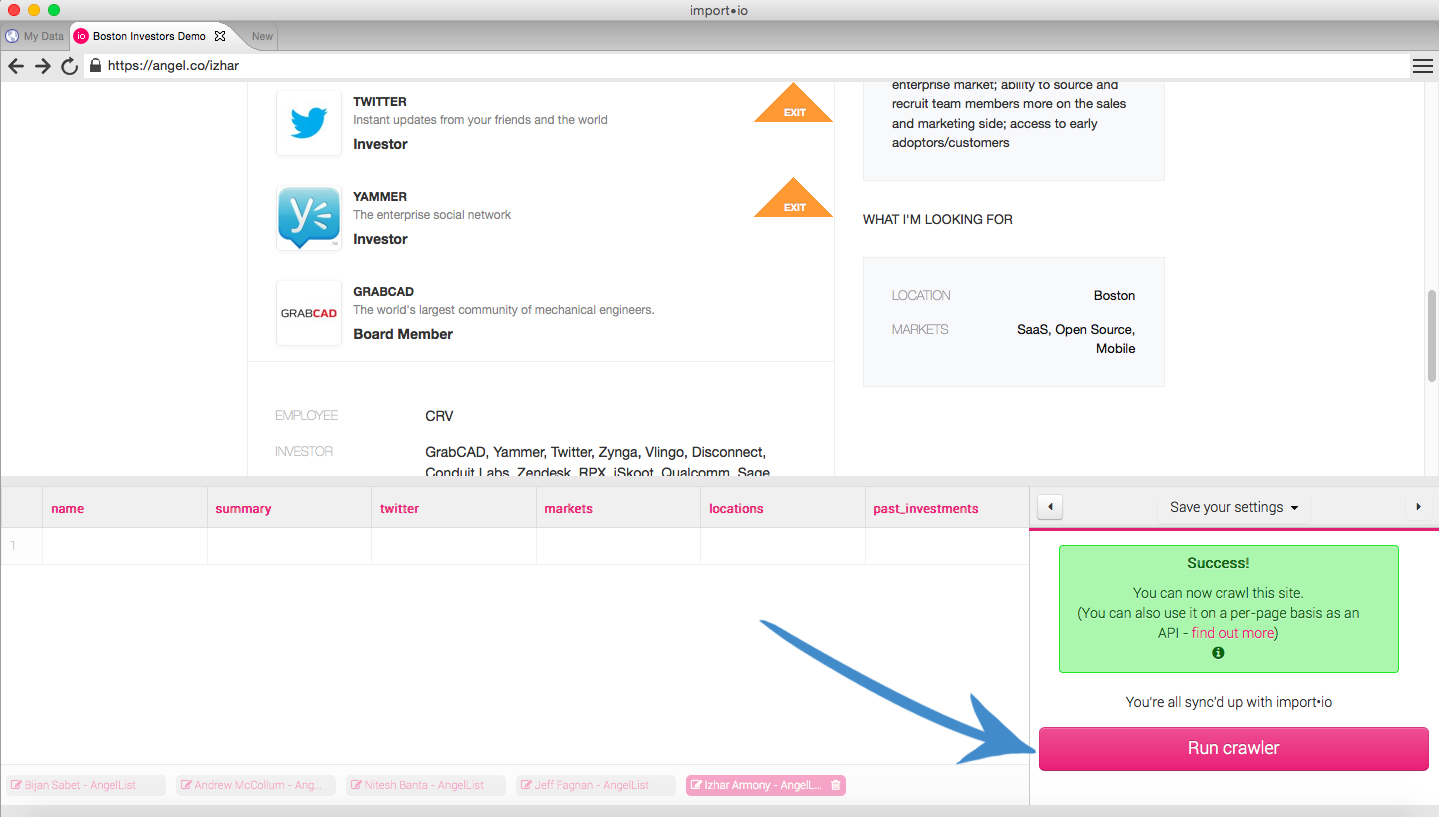

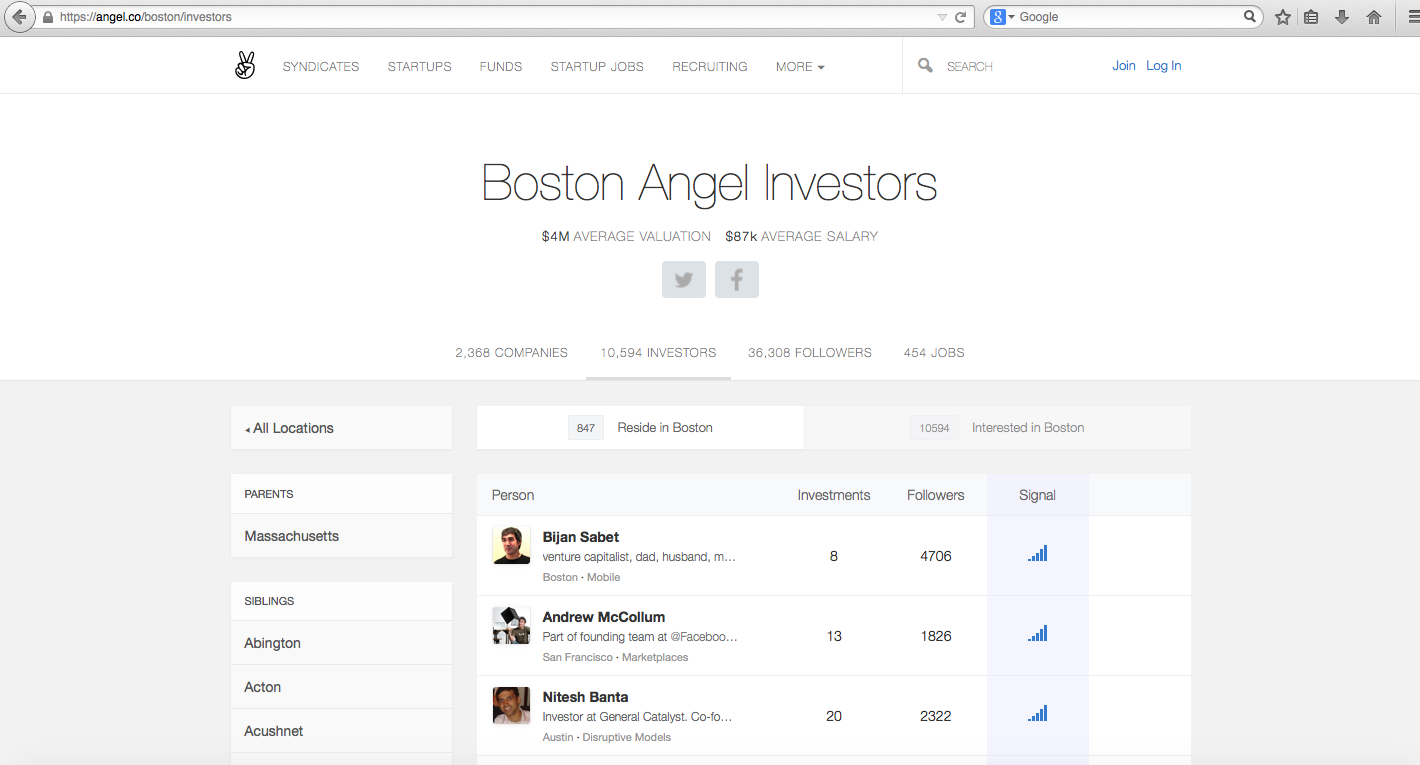

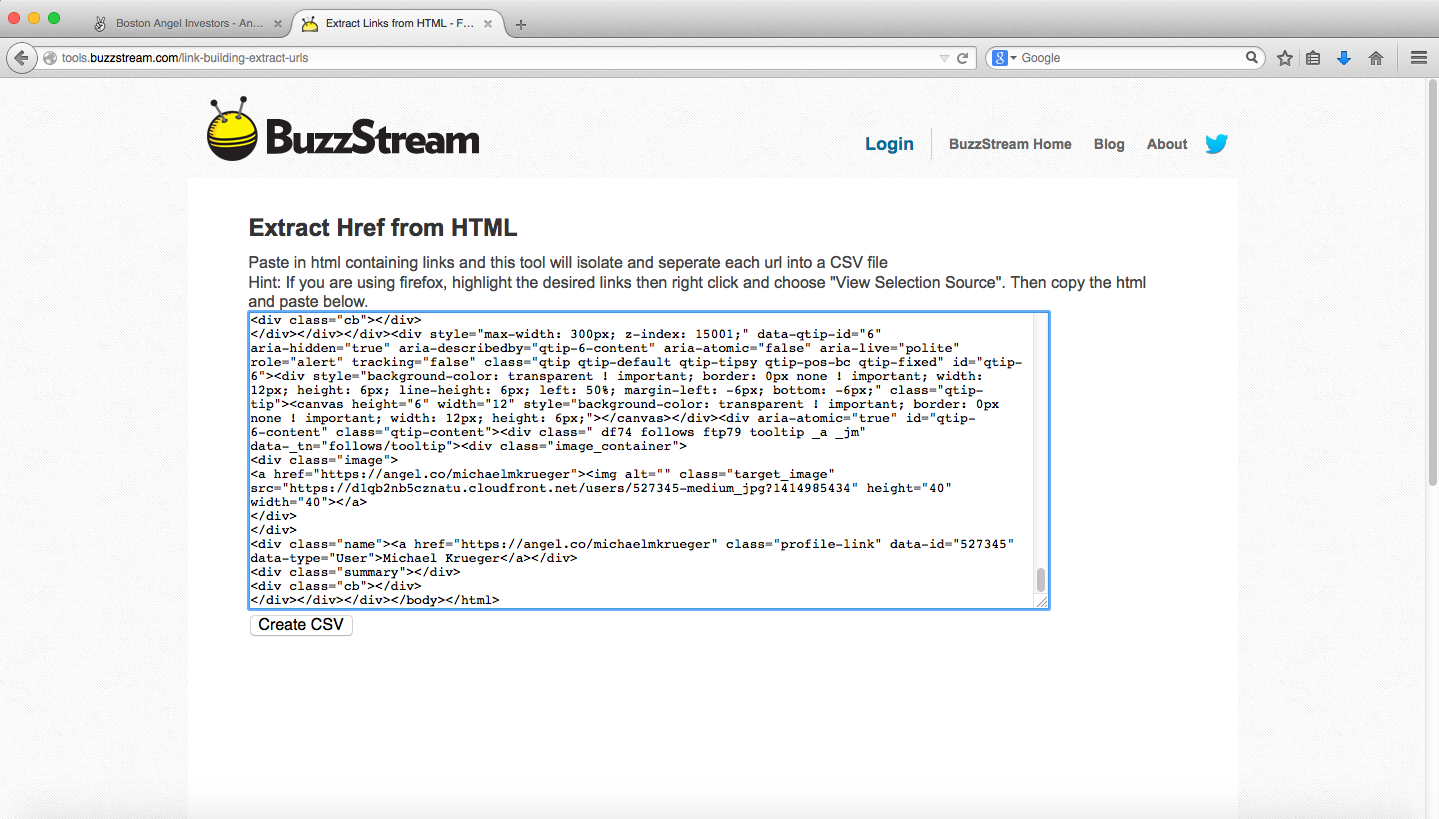

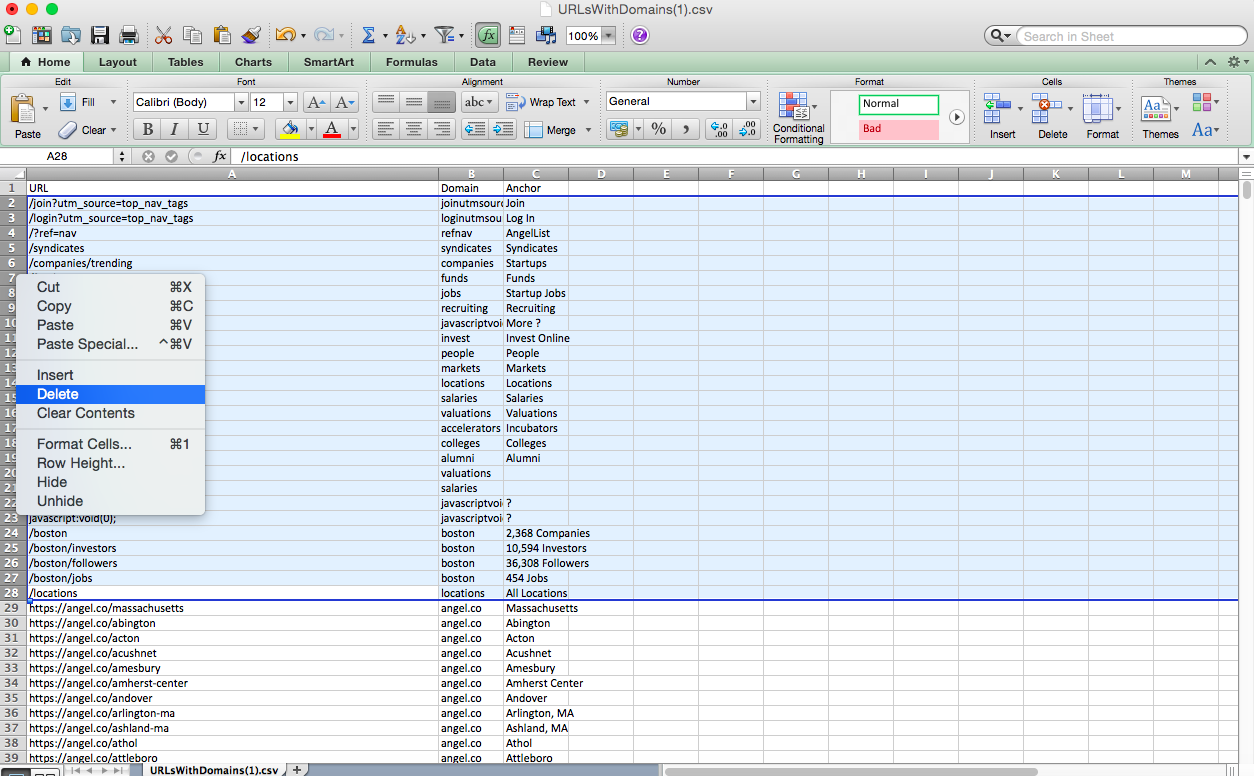

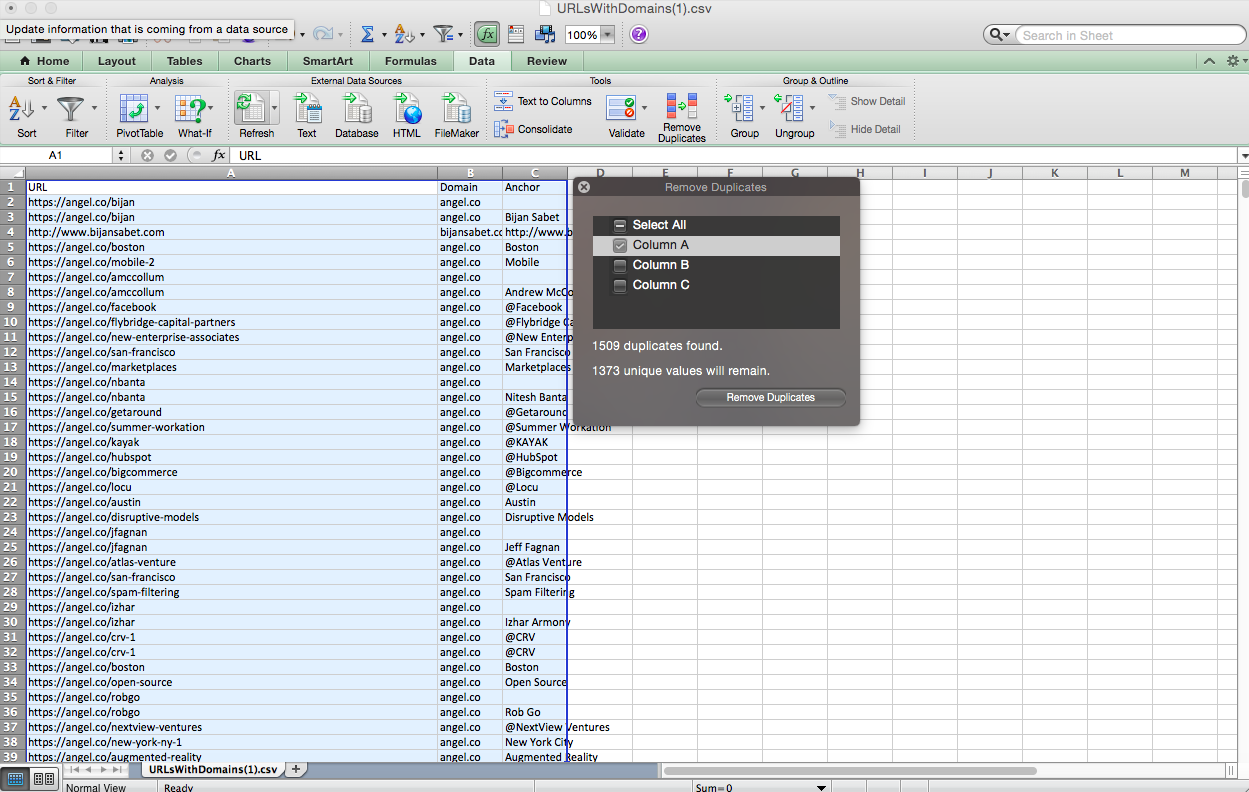

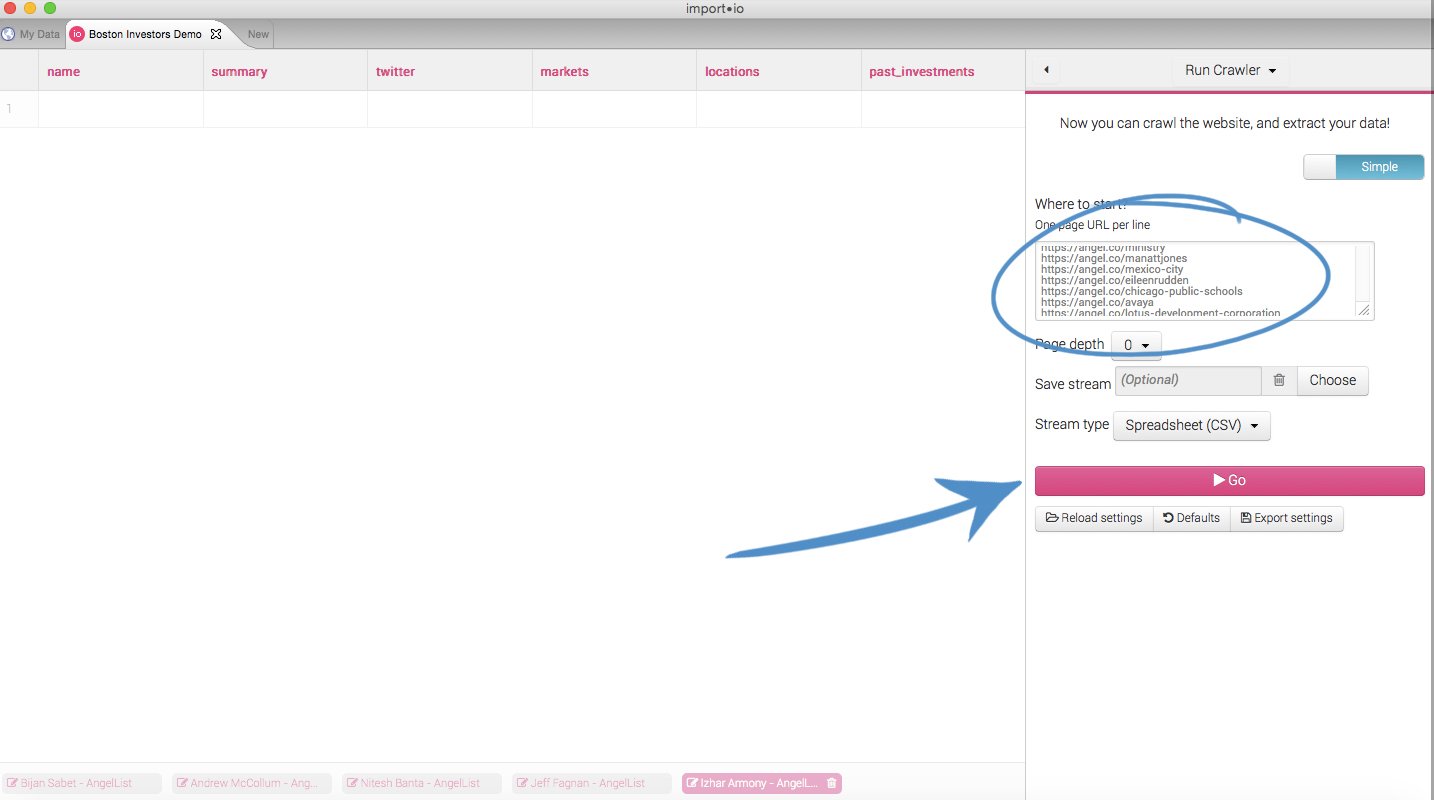

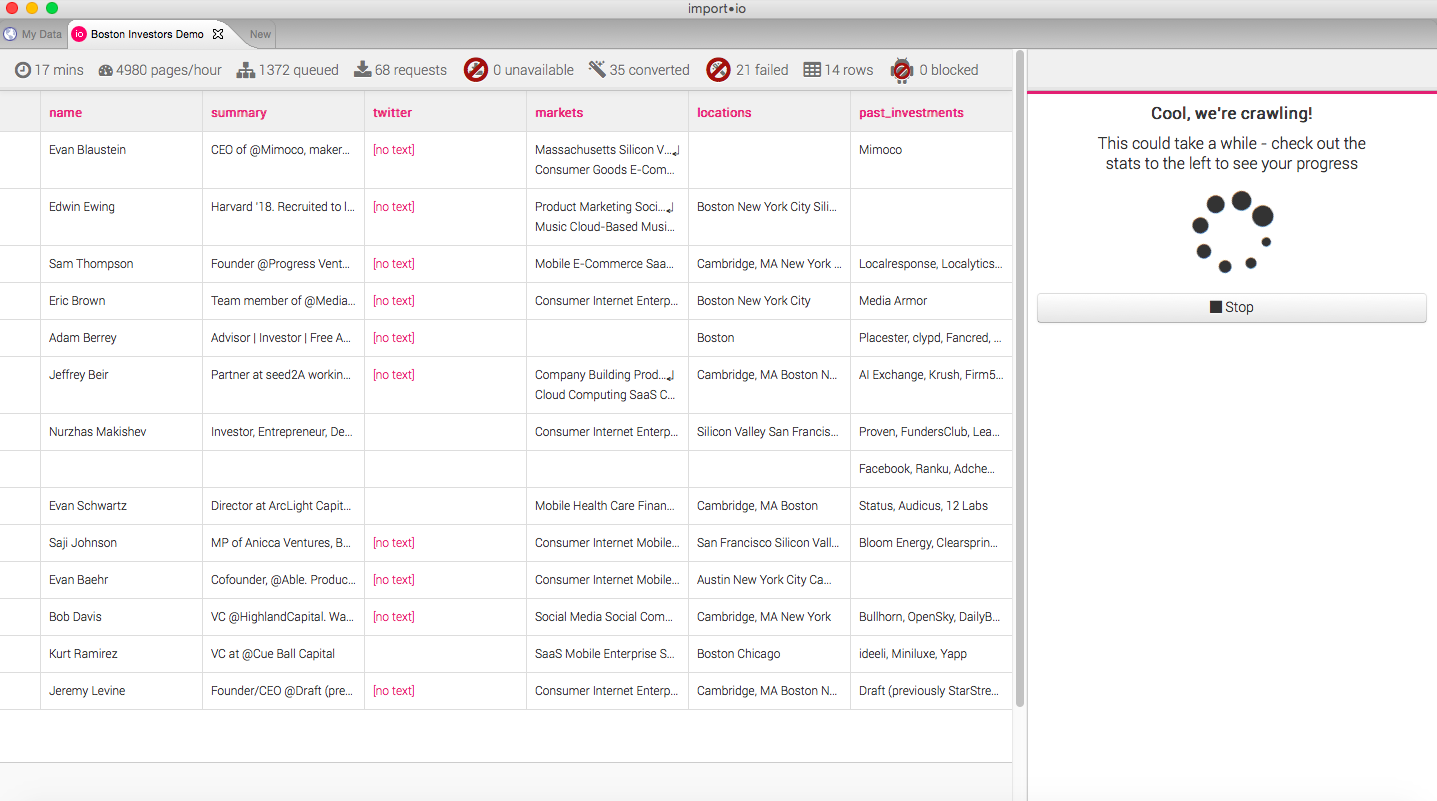

We all know AngelList is the best place to find investors, but who wants to trawl through thousands of profile pages to figure out which investors back companies in your location, business model, or industry?

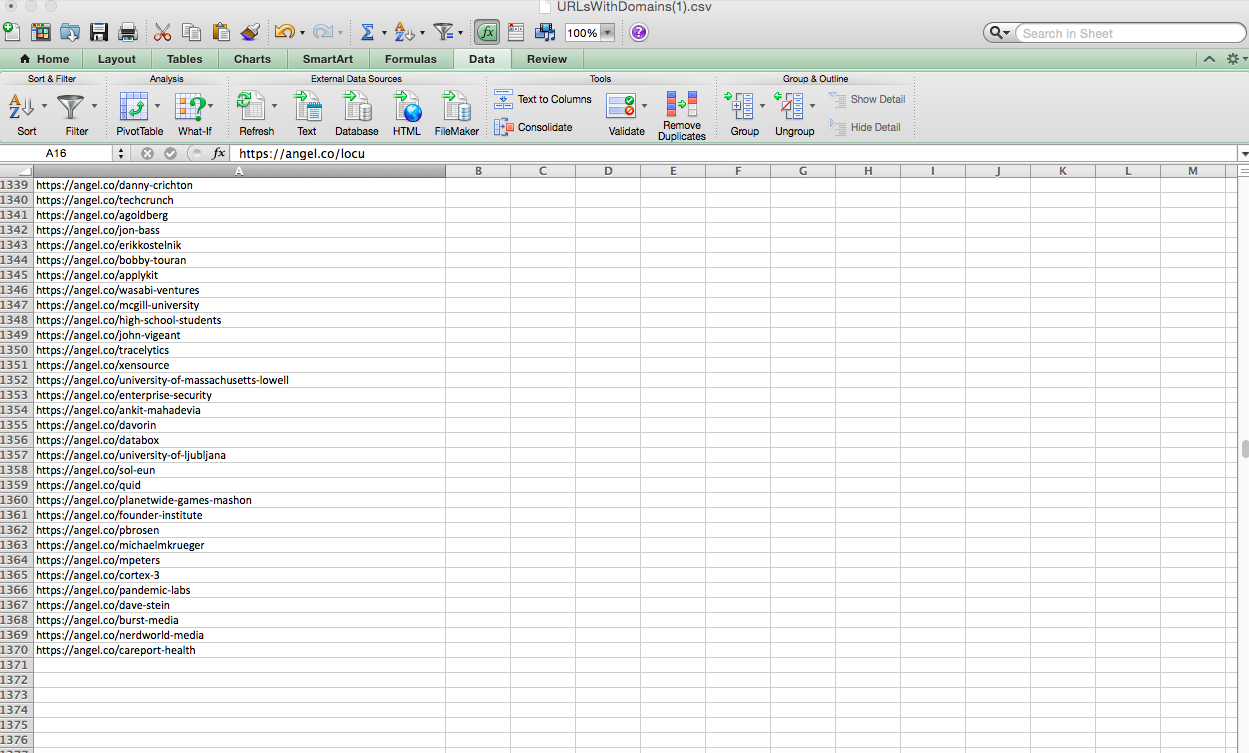

Wouldn't it be easier to have them all in an Excel spreadsheet you could skim through in a few minutes?

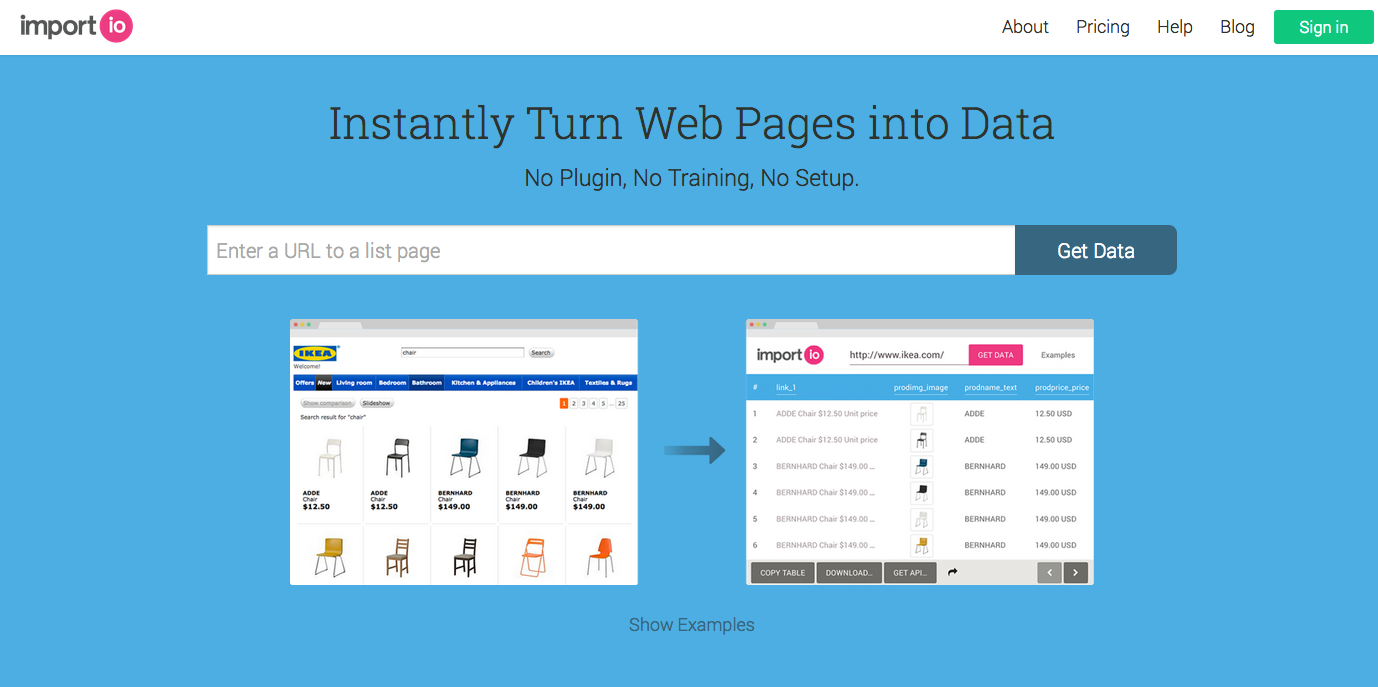

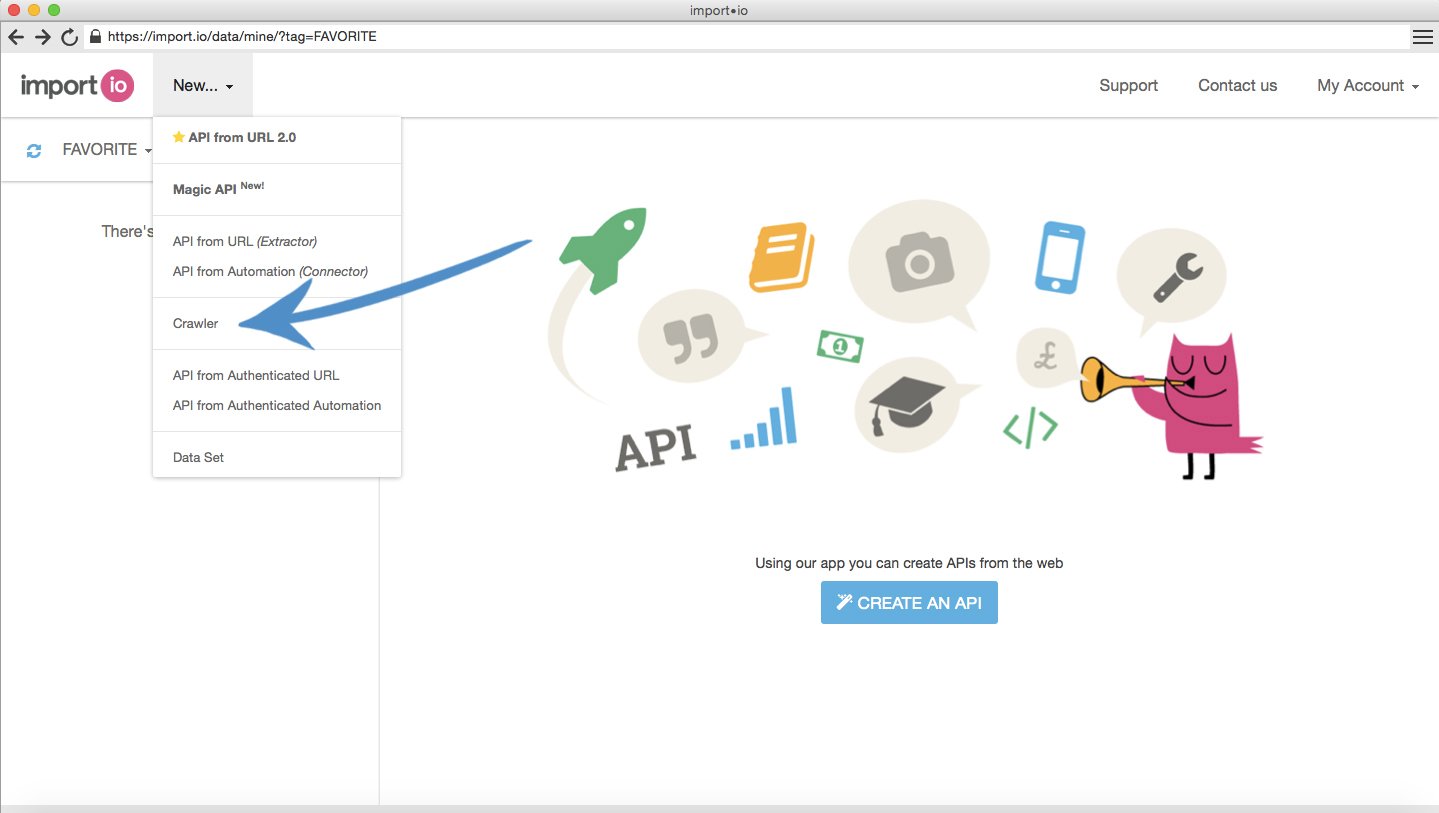

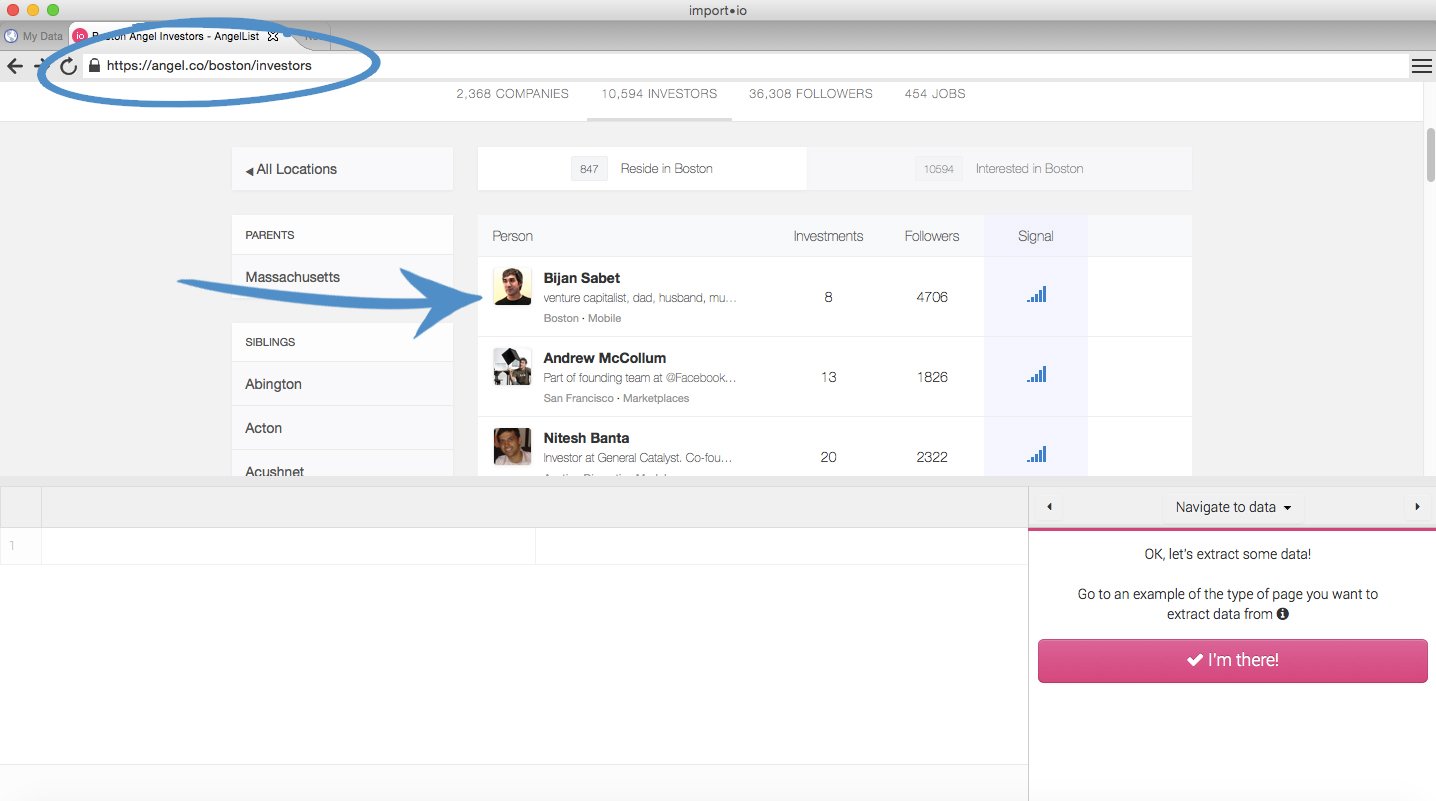

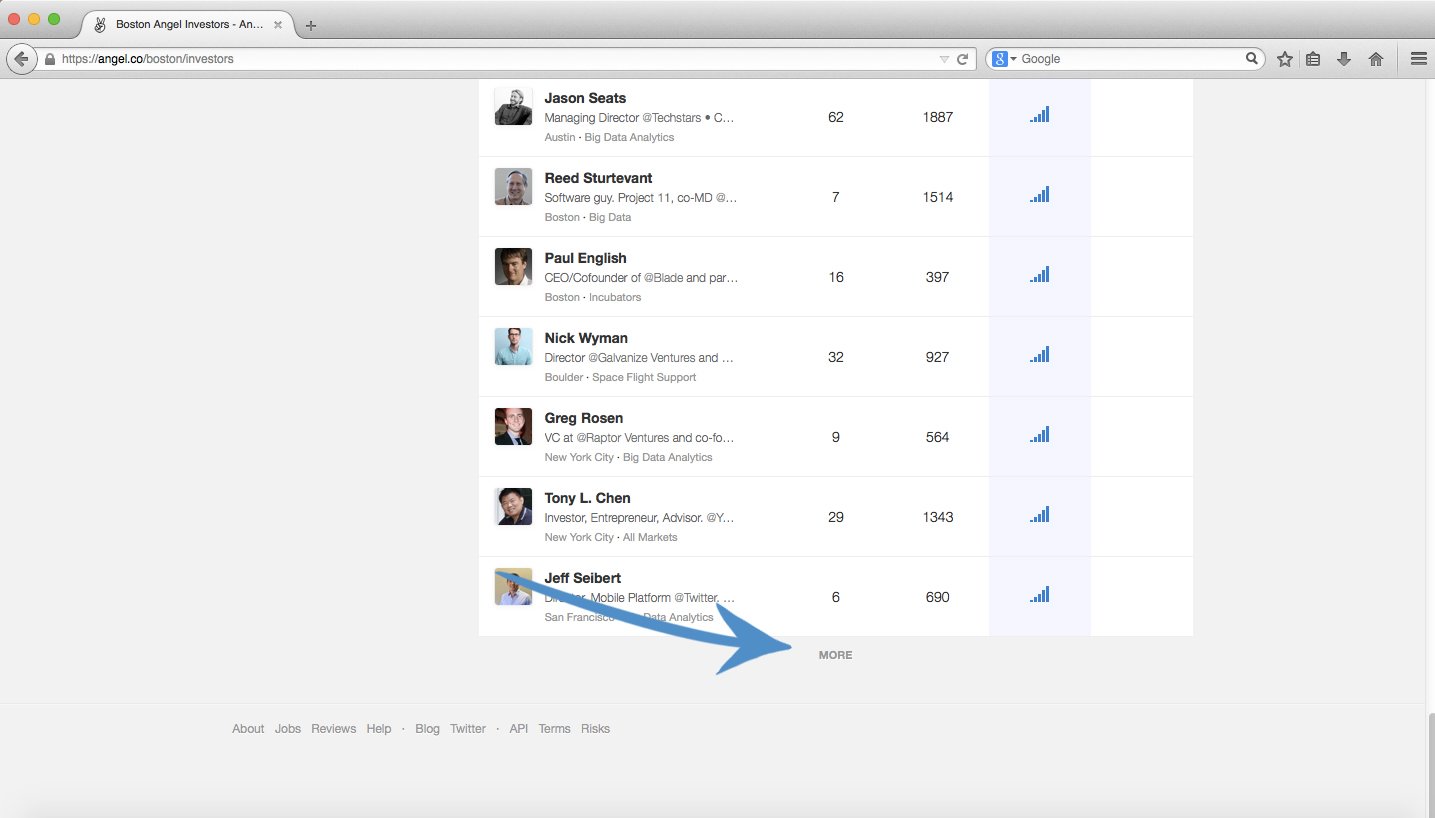

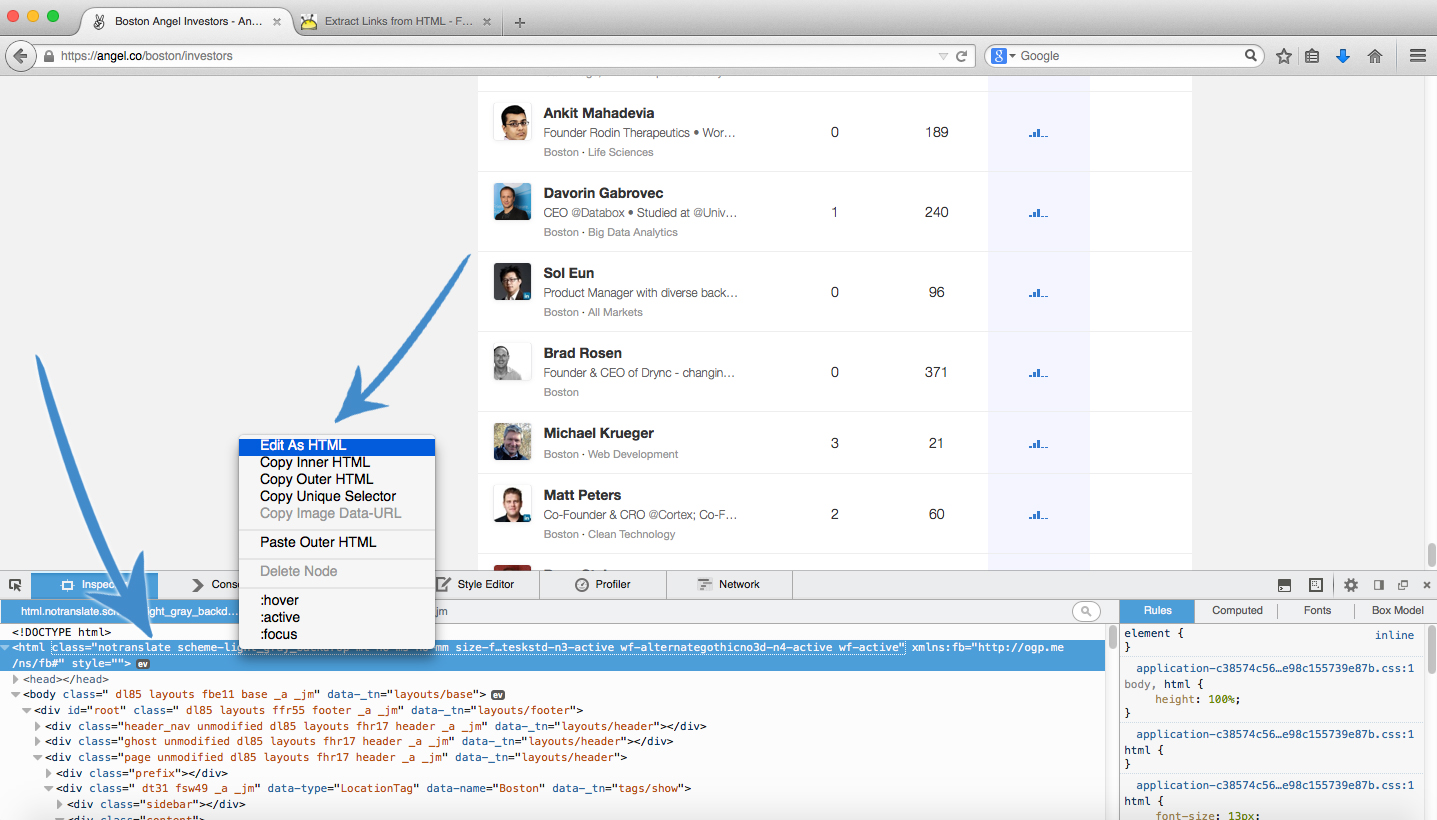

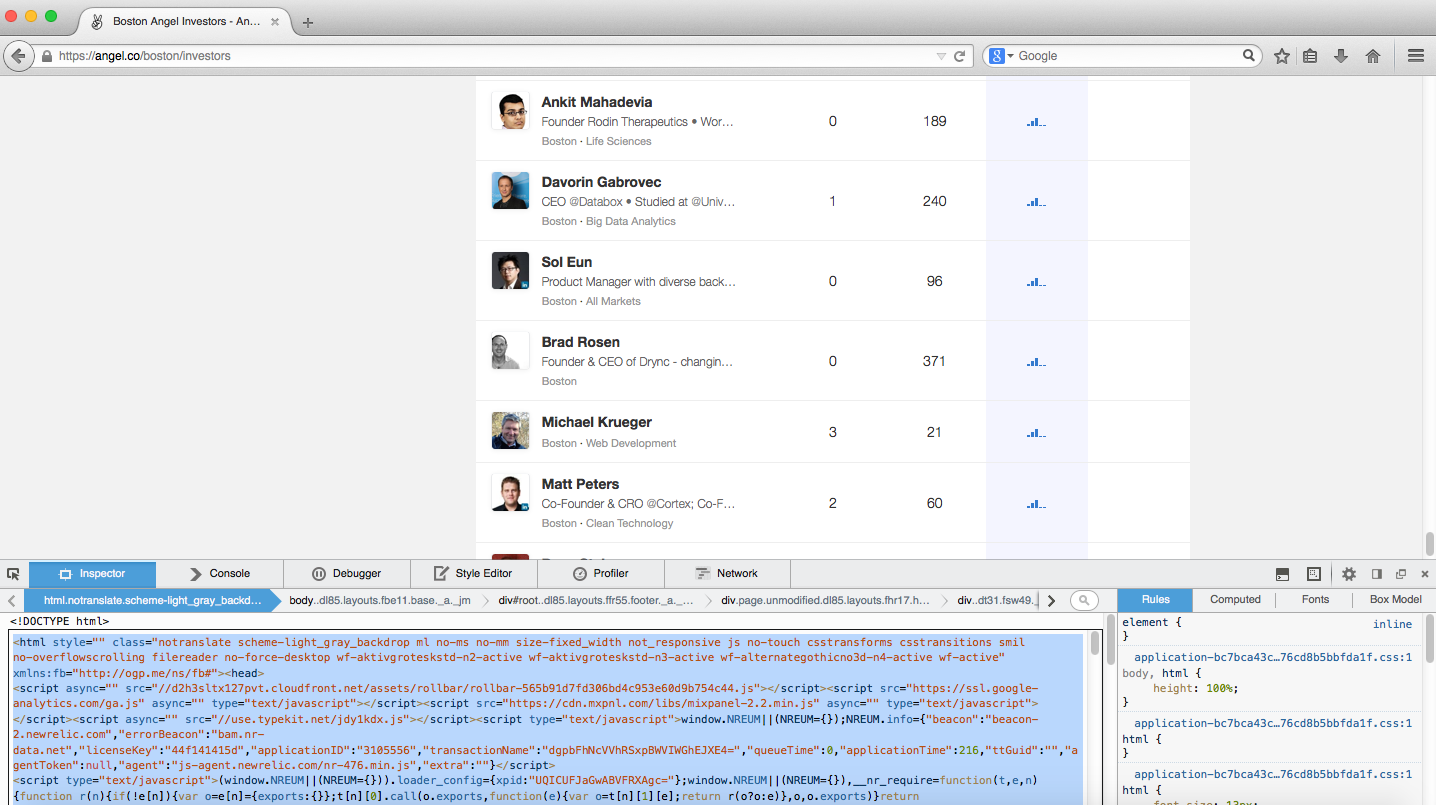

In this checklist, I'm going to show you exactly how to do that.

Check out step 2 to get started.

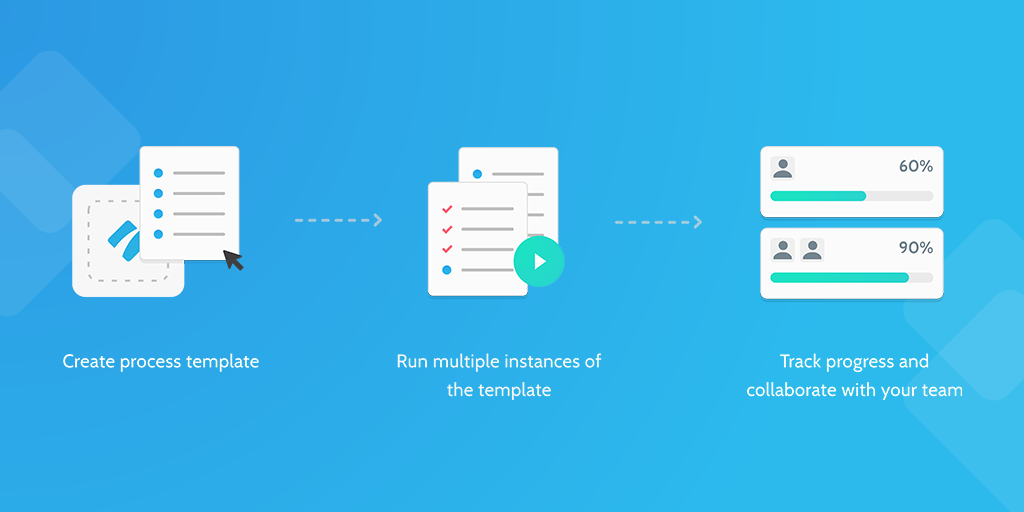

If you want to hand this off to a virtual assistant, create a Process Street account so you can collaborate with them on scraping the whole investor world!