The way customers see it, your software release cycle looks like this:

- Take ages developing the software

- Beta test

- Add a few features, fix a few bugs

- Done!

This isn’t real life. Beta testing is only one type of test your software needs to pass to avoid being a catastrophic failure.

If your software is struggling to get into a usable state, it’s probably because you’re overlooking testing.

Testing is important for two main reasons:

- Tests will reveal flaws in your software

- Tests will reveal flaws in your software development process

Note: Apart from customer-facing and QA tests, tests are code.

Keep reading to find the types of software testing your development team needs to make mandatory, along with an explanation of how to do each one.

Unit testing: the first pitfall

When your vacuum cleaner’s blocked, you detach the pipes to find which section the blockage is in. Every time you detach a pipe, you either rule it out as part of the problem, or discover the issue.

That’s exactly what unit testing is.

A unit can be anything from a full feature to a button, and it’s important you make sure you at least have all the units working individually before you can definitively say the software works as a whole.

Unit testing is essential, and the development team should do it every time new parts of the code base are reviewed.

It can’t be done by non-technical teams because it requires intimate knowledge of the code and is white-box testing (i.e. testing inside the software, not as a user).
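As a minimal sketch of what a unit test looks like in code, the Python example below tests one small unit in isolation with the standard library's `unittest` module. The `archive_checklist` function and its dictionary-based checklist are hypothetical stand-ins chosen for illustration, not code from a real product.

```python
import unittest


def archive_checklist(checklist):
    """Hypothetical unit under test: return an archived copy of a checklist."""
    if checklist.get("archived"):
        raise ValueError("checklist is already archived")
    return {**checklist, "archived": True}


class ArchiveChecklistTest(unittest.TestCase):
    def test_marks_checklist_as_archived(self):
        result = archive_checklist({"name": "Release QA", "archived": False})
        self.assertTrue(result["archived"])

    def test_does_not_modify_the_original(self):
        original = {"name": "Release QA", "archived": False}
        archive_checklist(original)
        self.assertFalse(original["archived"])

    def test_rejects_already_archived_checklist(self):
        with self.assertRaises(ValueError):
            archive_checklist({"name": "Release QA", "archived": True})
```

Saved in a file, tests like these can be run with `python -m unittest`. Each test checks one behaviour, so a failure points at one specific section of pipe.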

See the unit test section of our complete testing process for more information.

Integration testing: check if the units work well together

Integration testing moves one level higher than unit testing, and looks at how the units integrate with each other.

For example, after building a unit for archiving checklists, we might find that it breaks the search function. While integration testing, we’d find out which parts of the code clash and fix that during the debugging phase.
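The archiving and search example can be sketched as an integration test. Both classes below are hypothetical, simplified units invented for illustration. The point is that the test exercises them together rather than one at a time.

```python
class ChecklistStore:
    """Hypothetical unit 1: stores checklists and archives them."""

    def __init__(self):
        self._checklists = {}

    def add(self, checklist_id, title):
        self._checklists[checklist_id] = {"title": title, "archived": False}

    def archive(self, checklist_id):
        self._checklists[checklist_id]["archived"] = True

    def active(self):
        return {
            cid: c for cid, c in self._checklists.items() if not c["archived"]
        }


class Search:
    """Hypothetical unit 2: searches the titles of active checklists."""

    def __init__(self, store):
        self._store = store

    def find(self, term):
        term = term.lower()
        return sorted(
            cid
            for cid, c in self._store.active().items()
            if term in c["title"].lower()
        )


def test_search_still_works_after_archiving():
    store = ChecklistStore()
    store.add("a", "Release checklist")
    store.add("b", "Release notes review")
    search = Search(store)

    store.archive("a")

    # The archived checklist disappears; the other one is still found.
    assert search.find("release") == ["b"]
```

If archiving ever corrupted the data that search relies on, each unit's own tests could still pass while this one fails.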

The more units your software has, the more integration test cases you need, because you have to test how each unit reacts to every other.

Integration testing is the next logical step up from unit testing, and one step in the software testing process. It’s at this point that two testing tools come into play: stubs (dummy code that stands in for called modules that aren’t ready yet) and drivers (dummy code that calls the modules under test when the real caller isn’t ready yet):

Suppose you have three different modules: Login, Home, User. Suppose login module is ready for test, but the two minor modules Home and User, which are called by Login module are not ready yet for testing. At this time, we write a piece of dummy code, which simulates the called methods of Home and User. These dummy pieces of code are the stubs.

On the other hand, Drivers are the ones, which are the “calling” programs. Drivers are used in bottom up testing approach. Drivers are dummy code, which is used when the sub modules are ready but the main module is still not ready.

Taking the same example as above. Suppose this time, the User and Home modules are ready, but the Login module is not ready to test. Now since Home and User return values from Login module, so we write a dummy piece of code, which simulates the Login module. This dummy code is then called Driver. — Rahul Yadav
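The Login, Home and User example might look roughly like this in Python. Every function here is hypothetical, and passing the called modules in as parameters is just one simple way to make them swappable, assumed for this sketch.

```python
# --- Top-down: Login is ready, Home and User are not, so we stub them. ---

def home_stub(username):
    """Stub: returns a canned value in place of the real Home module."""
    return "home page for " + username


def user_stub(username):
    """Stub: returns a canned value in place of the real User module."""
    return {"username": username, "role": "member"}


def login(username, password, load_home, load_user):
    """Module under test. The modules it calls are passed in as parameters."""
    if not password:
        raise PermissionError("missing password")
    return {"home": load_home(username), "user": load_user(username)}


# --- Bottom-up: Home and User are ready, Login is not, so we use a driver. ---

def real_home(username):
    return f"<h1>Welcome, {username}</h1>"


def real_user(username):
    role = "admin" if username == "root" else "member"
    return {"username": username, "role": role}


def login_driver(username):
    """Driver: stands in for Login by calling the lower modules directly."""
    return real_home(username), real_user(username)
```

Calling `login("ada", "secret", home_stub, user_stub)` tests Login before its dependencies exist, while `login_driver("root")` exercises Home and User before Login exists.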

System testing: does the entire piece of software work as intended?

By the time you get to system testing, you need to have completed both unit and integration tests and have the software fully loaded up in a test environment.

Think of it this way: when you build a computer, you test the power supply, motherboard, etc. individually (unit test). You then check that the motherboard is getting power from the power supply (integration test), and finally that the entire computer works as a whole (system test). The system test could be the point where the computer explodes because of some previously unknown reaction between components.

System testing is an extremely broad area and has had entire books written about it, but can be broken down quickly into its main ingredients:

- Usability testing: are the user interfaces easy to operate and understand?

- Documentation testing: does the system guide explain the features as they work in real life?

- Functionality testing: does everything behave as expected and described in documentation?

- Inter-operability testing: does the system work with third party systems (different OS, browser, plug-ins, etc.)?

- Performance testing: does the system break itself by trying to use too many resources?

- Scalability testing: is the system affected by user count, user location, or server load?

- Stress testing: what’s the maximum strain the system can take before it breaks?

- Load testing: can the system handle the expected strain?

- Regression testing: do new features break old ones?

- Compliance testing: does the system comply with regulators (e.g. HIPAA)?

- Security testing: are there any security vulnerabilities?

- Recoverability testing: how fast/effectively does the system recover after breakdown?

As software testers, it’s your job to make sure you go through the checklist above and all of its test cases because otherwise you could miss any number of fatal errors.
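Several items on that checklist can be automated. As one rough illustration, here is a sketch of a load test using only Python's standard library. `handle_request` is a hypothetical placeholder for a call into your system, and the request counts are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(n):
    """Hypothetical system entry point; replace with a call to your system."""
    time.sleep(0.001)  # simulate a little work
    return n * 2


def timed_call(n):
    """Call the system once and record whether it succeeded and how long it took."""
    start = time.perf_counter()
    try:
        handle_request(n)
        ok = True
    except Exception:
        ok = False
    return ok, time.perf_counter() - start


def run_load_test(total_requests, concurrent_users):
    """Send total_requests calls using concurrent_users worker threads."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    durations = sorted(duration for _, duration in results)
    return {
        "requests": len(results),
        "failures": sum(1 for ok, _ in results if not ok),
        "slowest_seconds": durations[-1],
    }
```

Running `run_load_test(total_requests=50, concurrent_users=5)` with the expected traffic is a load test. Raising the numbers until failures appear turns the same sketch into a crude stress test.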

User acceptance testing: alpha and beta (black box) testing

When unit, integration, and system testing are complete, it’s time to accept that you’ve done all you can, and that you have probably still only scratched the surface of the errors in your software.

There are some bugs only users can catch because of the near-infinite combinations of units and use cases. That’s why user acceptance testing is important. After all, you’re coding for users, not for yourselves, so it’s vital to get an insight into exactly how users break stuff.

User acceptance testing is done by a select group of users with an interest in using the finished product. Since the testing benefits the company, software companies often let users beta test the software for free, and in return get their error logs and feedback.

It requires an audience, a way for users to access the software, and a way for them to report errors and feedback. At Process Street, for example, several of our new features will be in beta at any given time.

We collect feedback with Elev.io and handle support tickets with Intercom. In Agile, the goal is to get as many near-perfect features out as quickly as possible, so this often means leaving the last stages of testing to the users on the live server. Otherwise — as a small software business — customers would be waiting a long time to use features that were undergoing testing for just a few rare edge cases.

The process is simple:

- Select a varied sample of power users (different locations, platforms, etc.)

- Invite them to the beta with a key

- Require they submit their feedback

- Iterate on the software based on feedback
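The first two steps can be sketched in a few lines of Python. The user records, field names and key format below are assumptions made for illustration, not a description of how any particular company runs its beta.

```python
import random
import secrets
from collections import defaultdict


def pick_beta_testers(users, per_group, seed=None):
    """Pick up to per_group users from every (location, platform) group."""
    groups = defaultdict(list)
    for user in users:
        groups[(user["location"], user["platform"])].append(user)

    rng = random.Random(seed)  # a fixed seed makes the selection repeatable
    chosen = []
    for key in sorted(groups):
        members = groups[key]
        chosen.extend(rng.sample(members, min(per_group, len(members))))
    return chosen


def issue_beta_keys(testers):
    """Map each tester's email to a random, hard-to-guess invite key."""
    return {t["email"]: secrets.token_urlsafe(16) for t in testers}
```

Grouping before sampling is what keeps the sample varied. Picking at random from the whole user list could easily return testers who all share one platform.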

For more information on beta testing, check this guide from Fog Creek founder Joel Spolsky.

Tools for automated testing

Unlike manual testing — where humans need to check each use case by hand, and log the outcome — automated testing relies on tools to do the job for you.

For example, frameworks like Selenium will test the cross-browser compatibility of web apps. That’s something that anyone who’s tried to get their app to display properly on Internet Explorer will know the value of.

- Selenium: automated cross-browser regression testing. Selenium is free and open source. It has many premium product spin-offs such as Sauce Labs, which builds on the code to provide extra functionality. Tools built on this framework are a must-use for testing browser compatibility.

- CloudBees: fully automated testing suite from code to production. CloudBees includes unit testing and continuous integration features, and starts from free. Used by Netflix.

- CircleCI: continuous integration platform. Used by Facebook and Spotify, CircleCI provides a full platform for automated testing, amongst other things such as its own build environment and deployment tools.
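To give a feel for what a cross-browser check with Selenium involves, here is a small Python sketch. The helper names are made up for this example. The only Selenium calls used are `webdriver.Chrome()`, `webdriver.Firefox()`, `driver.get()`, `driver.title` and `driver.quit()`, and running it against real browsers assumes the `selenium` package and the browsers themselves are installed.

```python
def page_title(driver, url):
    """Load url in an already-started WebDriver and return the page title."""
    try:
        driver.get(url)
        return driver.title
    finally:
        driver.quit()  # always close the browser, even if loading fails


def check_title_everywhere(url, expected_title, driver_factories):
    """Return {browser name: True/False} for each browser factory given."""
    return {
        name: page_title(make_driver(), url) == expected_title
        for name, make_driver in driver_factories.items()
    }


def real_browsers():
    """Factories for real browsers; requires the selenium package."""
    from selenium import webdriver  # imported lazily so the rest runs without it

    return {"chrome": webdriver.Chrome, "firefox": webdriver.Firefox}
```

A call such as `check_title_everywhere("https://example.com", "Example Domain", real_browsers())` would open the page in each browser in turn and report which ones show the expected title.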

The benefits of automated testing

As your software expands, you’ll find it’s practically impossible to cover all the bases. Considering the endless combinations of use cases, and everything a new feature could affect, isn’t feasible during the development cycle.

And that’s where automated testing comes in.

Instead of manually testing all eventualities, you write tests or use a testing tool to figure it out for you.

Automated testing frees your dev team from the dreary weeks of testing that follow building a feature, and gets them motivated to start work on the next one instead of fussing about previous work.

It’s more efficient, delivers higher quality software, and decreases costs.

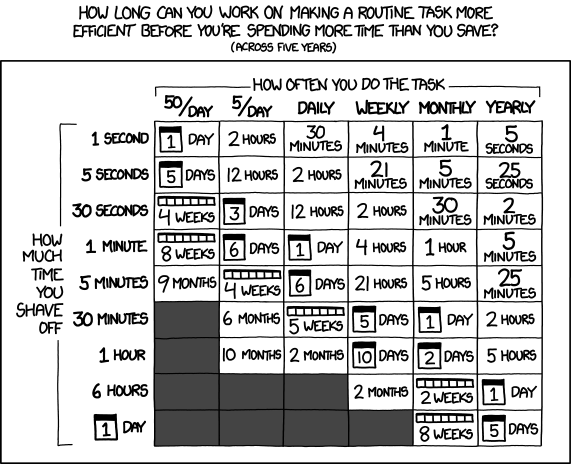

Think of it in a similar way to setting up automation. It takes time to set the automation up, but saves a lot more time in the future:

As you can see from the graph above, if you usually do 5 tests a day and each test takes 30 minutes, you gain 6 months of extra time over the course of 5 years! Imagine if your team had that kind of freedom.
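The arithmetic behind that figure can be checked with a short calculation. This sketch assumes the tests run every calendar day and that the result is counted in 24-hour days and 30-day months. Those assumptions are mine, chosen because they reproduce the number quoted above.

```python
def days_saved(tests_per_day, minutes_per_test, years):
    """Return the total time spent on the tests, in 24-hour days."""
    total_minutes = tests_per_day * minutes_per_test * 365 * years
    return total_minutes / 60 / 24


days = days_saved(tests_per_day=5, minutes_per_test=30, years=5)
months = days / 30  # rough month length

print(f"{days:.0f} days, or about {months:.1f} months")
```

That works out to roughly 190 full days, a little over six months. Counted in 8-hour working days instead, the same 4,562.5 hours would be about 570 working days.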

Managing software testing with Process Street

No complex task should ever be taught or executed without documentation. That’s because humans are extremely error-prone, however much we think we’re not.

For that reason, we’ve put together a few checklists for development teams.

While we have a ton of pre-made processes, Process Street is essentially a blank slate, so you’re able to get a free account, type up your own testing process, and then track its completion with your team.

Get a free account today and start improving the speed and quality of your software testing

Benjamin Brandall

Benjamin Brandall is a content marketer at Process Street.