No matter how foolproof you think your operations are, human error will always pose a threat. Heck, it’s already responsible for 52% of security and data breaches, was the root cause of a host of famous tragedies, and can strike at any time.

But what exactly is human error, and how can we limit its effects if it can’t be completely prevented?

To answer that question, we here at Process Street have broken it down for you. In this article, you’ll learn:

- The four types of human error

- How the different types of human error are caused

- The single technique to combat each type in your business

It’s time to stop leaving your success open to random chances of failure.

What is human error?

When working with machines there is a certain amount of predictable error. This could be due to a part wearing out after a set number of cycles or the technology itself being incapable of performing a task to the ideal level of accuracy.

The mistake isn’t a conscious decision – it’s an unintentional fault that is an inherent part of the system carrying out the tasks it’s given. This is much the same with human error.

“Human error” is any mistake or action performed by a human which causes the thing they’re doing to go wrong. The mistake must be unintentional, as otherwise it is a deliberate violation of policy – human error doesn’t account for intentional mistakes or negligence.

It’s the human equivalent of the margin of error that comes with machinery.

Unfortunately, the human element makes human errors much harder to predict, prevent, and even detect than machine errors. People perform at different capacities and far from consistently, so the risk of human error can never truly be eliminated.

However, with the tips in this post you can learn how to identify human error, find the source of it, and make sure that it doesn’t become normalized.

Types of human error

It’s difficult (if not almost impossible) to effectively tackle human error without being able to see the cause of it. At the heart of this is the ability to categorize the errors you encounter, as this will allow you to give the appropriate response.

Broadly speaking, human error can be separated into two categories: action errors and thinking errors. Each of these, in turn, can be split into two sub-categories.

I’ll go into detail about each category below, but it’s first worth noting that human errors can be incredibly difficult to identify, as several categories of error often appear at the same time. For example, don’t be quick to judge the cause of a problem as an action error when there could also be a contributing thinking error.

Also, while some identify another category as “violations” (errors caused by intentional negligence), these are more of a disciplinary problem, and thus aren’t particularly relevant to our view. Violations are avoidable through policy, accountability, and enforcement, whereas unintentional human errors are much harder to deal with, and thus are important to focus on separately.

Action errors

Action errors relate to times where actions aren’t performed as planned, usually because the person responsible is familiar with the process and relies more on instinct than conscious thought. The key here is that the action is flawed as opposed to the plan or thought behind it.

In other words, action errors are when you’re unintentionally not doing what you’re supposed to.

The two types of action errors are:

- Slips – a frequently performed action is done wrong or is carried out on the wrong object

- Lapses – a step is forgotten (a “lapse” in short-term memory)

For example, a “slip” could be filling a car with petrol instead of diesel, as you’re performing the correct action but with the wrong fuel. A “lapse” would be forgetting to replace your fuel cap before driving off once you’re finished.

Note that in both of these cases the issue isn’t with not knowing what to do – it’s more of a case of taking your actions for granted and forgetting a common task as a result.

Thinking errors

Thinking errors, as you might imagine, are the opposite. They’re situations where the intended actions are wrong rather than the way they’re carried out. Here, an incorrect or flawed plan of action is the cause of the error as opposed to the action itself.

Basically, thinking errors are when you correctly carry out the wrong task (again, unintentionally) due to something like missing knowledge or unclear instructions.

The two types of thinking errors are:

- Rule-based mistakes – a good rule/method is applied in the wrong way or a bad rule is followed (when rules/procedures are available)

- Knowledge-based mistakes – incorrect logic or resources are applied, or experience is lacking, in a situation where no rules are available

So, a “rule-based” mistake might be filling your car with $30 of gas for the week, but not taking into account that fuel prices have risen, giving you less fuel for the same cost. A “knowledge-based” mistake would be refueling a farm-only tractor with regular diesel instead of red diesel, due to not knowing about the alternative, cheaper fuel.

Again, the fault here isn’t with how the tasks involved are being performed, but instead with the process that led to you doing them.
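If it helps to see the four types side by side, here’s a minimal sketch of the categories above as a decision function. The names (`ErrorType`, `classify_error`, and the three yes/no questions) are my own illustration rather than a standard tool, and real incidents often involve more than one type at once.

```python
from enum import Enum


class ErrorType(Enum):
    SLIP = "slip"
    LAPSE = "lapse"
    RULE_BASED = "rule-based mistake"
    KNOWLEDGE_BASED = "knowledge-based mistake"


def classify_error(plan_was_correct: bool,
                   step_was_omitted: bool = False,
                   rule_was_available: bool = False) -> ErrorType:
    """Classify an unintentional error using the four types described above."""
    if plan_was_correct:
        # Action error: the plan was fine, the execution was not.
        return ErrorType.LAPSE if step_was_omitted else ErrorType.SLIP
    # Thinking error: the execution faithfully followed a flawed plan.
    if rule_was_available:
        return ErrorType.RULE_BASED
    return ErrorType.KNOWLEDGE_BASED


# Petrol in a diesel car: right plan, wrong execution, no step forgotten.
print(classify_error(plan_was_correct=True).value)
```

Asking “was the plan right?” first is the useful part, as it tells you whether to fix the way a task is carried out or the process behind it.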

Why it’s dangerous

It would be wonderful to say that human error only ever results in minor problems, but this just isn’t the case.

Every system, company, and process — no matter how well documented or seemingly foolproof — is vulnerable to human error. While most errors result in minor hits to efficiency or go unnoticed, it just takes the right combination of mistakes to cause truly catastrophic events.

Alternatively, the error might be so minor and infrequent that the cause goes ignored or undiagnosed. The trouble with these errors is that they are often repeated and build up to a huge flaw over time and scale.

To prove my point, here are a few examples of just how much damage human error can do.

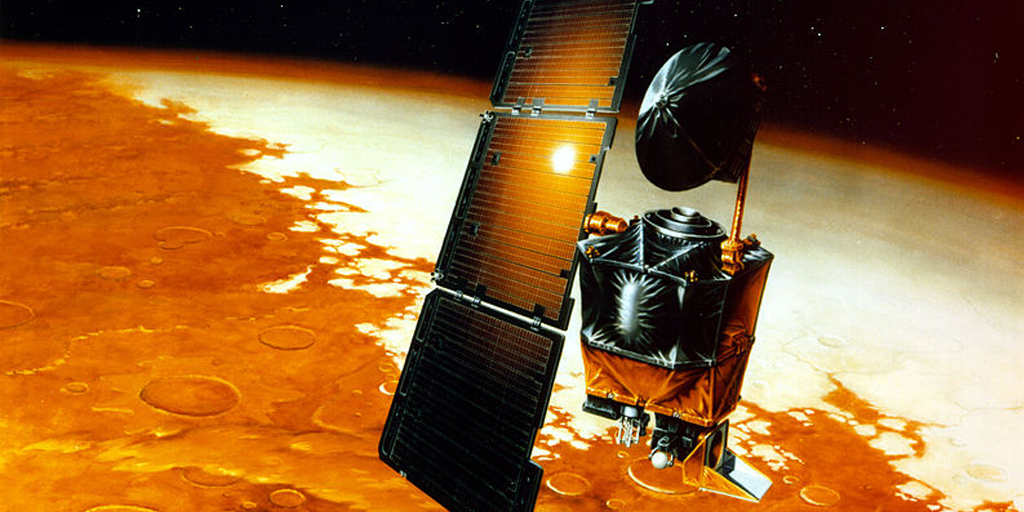

$193 million and countless hours of work disintegrated with the Mars Climate Orbiter

Human error can strike anywhere and at any time. In fact, slips are potentially more common in experienced people, as they tend to be overly certain of their expertise and actions to the point where it’s easy to perform an action wrong without thinking about it.

That’s exactly what happened with NASA and the Mars Climate Orbiter (MCO) — not even rocket scientists are immune to human error.

The problem lay in failing to make sure that the units used in all of their calculations, tests, and so on were consistent: either all imperial or all metric. Thus, when the MCO reached Mars, the programmed trajectory led to it getting far too close to the planet and burning up instead of maintaining a steady orbit.

While it’s uncertain as to whether NASA’s processes lacked a step to check that the units were consistent or whether that step wasn’t followed, the result is the same. A single, consistent human error ruined the entire (incredibly expensive) program.
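To show what a unit-consistency step could look like in software, here’s a rough sketch in which every value carries its unit and is converted before being combined. This is purely illustrative and not how NASA’s systems work; the `Impulse` class, `total_impulse` function, and unit labels are names I’ve made up for the example. The only hard fact in it is the conversion factor (1 pound-force second is 4.4482216152605 newton-seconds).

```python
from __future__ import annotations

from dataclasses import dataclass

# Conversion factors to newton-seconds (the metric unit of impulse).
TO_NEWTON_SECONDS = {
    "N*s": 1.0,
    "lbf*s": 4.4482216152605,
}


@dataclass(frozen=True)
class Impulse:
    """A thruster impulse reading that always carries its unit (hypothetical)."""
    value: float
    unit: str

    def in_newton_seconds(self) -> float:
        # Refuse to guess: an unlabeled or unknown unit is an error.
        if self.unit not in TO_NEWTON_SECONDS:
            raise ValueError(f"Unknown impulse unit: {self.unit!r}")
        return self.value * TO_NEWTON_SECONDS[self.unit]


def total_impulse(readings: list[Impulse]) -> float:
    """Sum readings only after converting each one to newton-seconds."""
    return sum(r.in_newton_seconds() for r in readings)


mixed = [Impulse(10.0, "lbf*s"), Impulse(10.0, "N*s")]
print(round(total_impulse(mixed), 2))
```

The point isn’t the arithmetic, but that a bare number with no unit attached can’t slip through unnoticed.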

Seven dead crew members and a lot of public coverage made the Challenger Shuttle a national tragedy

The Challenger shuttle disaster serves as a solemn reminder of how dire both the normalization of deviance and consistent human error can be.

The issue here was that the same knowledge-based mistake was made repeatedly by the teams working on the Challenger launch, to the point where the deviance from the expected test results became normal. The risk involved was deemed to be within acceptable levels.

Usually, this wouldn’t be an issue. However, the consistency of the deviance, combined with the context of the test results, should have warranted greater scrutiny of the issue in question (the joints on the Solid Rocket Boosters, or SRBs).

No such scrutiny was applied, and the compounded levels of “acceptable” risk showed their teeth at the launch, where the shuttle disintegrated, beginning with a joint in its right SRB. While it’s true that other factors also contributed (e.g., the cold weather), this consistent human error undoubtedly played a large part in the event.

It’s far from pleasant, but the Challenger serves as a very real (and, to this day, painful) reminder of what can happen when human error goes unchecked.

£150 million to repair 300,000 vehicles refueled incorrectly every year in the UK

As for a slightly less morbid example of human error, it’s been estimated that around 300,000 vehicles are filled with the wrong fuel every year in the UK, costing at least £150 million in repairs.

This is a widespread example of a slip – it’s incredibly easy to do, as refueling a car is a process that many (myself included) take for granted. Whether you pick up the wrong fuel nozzle or buy a new car and don’t consider that change when filling up, it’s a lot of time, money, and wasted effort in service of correcting such a simple error.

Checklists stop human error in its tracks

As I’ve already said, it’s impossible to completely eliminate human error. Short of completely removing the human elements of a given process, people will always be there and at risk of making mistakes. Heck, even if there aren’t people involved, you’ll still have to factor in a certain degree of error based on the machinery or software you use.

However, there are ways to severely limit the risk and effect of human error, chief among which is documenting your processes and following checklists to complete your tasks.

“We are all plagued by failures—by missed subtleties, overlooked knowledge, and outright errors…

We are not in the habit of thinking the way the army pilots did as they looked upon their shiny new Model 299 bomber—a machine so complex no one was sure human beings could fly it.

… they chose to accept their fallibilities. They recognized the simplicity and power of using a checklist.

And so can we. Indeed, against the complexity of the world, we must. There is no other choice.” – Atul Gawande, The Checklist Manifesto, p.46

I’ll break down each reason why below, but to summarize:

- Checklists break down “impossible” tasks into bitesize chunks

- Following basic task lists prevents lapses in memory

- Detailed instructions help to limit slip-ups

- Continuous improvement means that rule-based mistakes should only happen once

- Knowledge-based mistakes become rarer the more often a checklist is used

Checklists break down “impossible” tasks

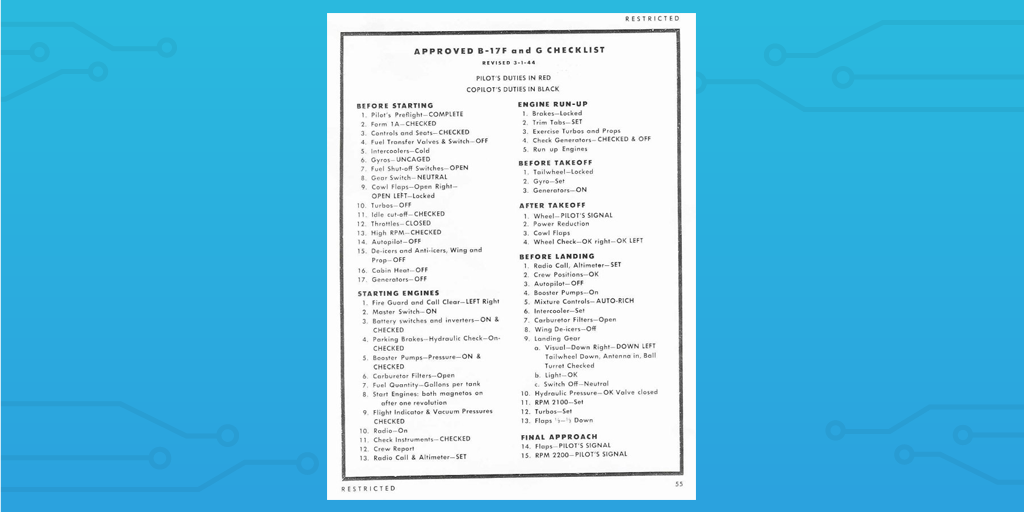

In 1935, Boeing made the Model 299 in response to a request for new designs from the army. This technological marvel was later dubbed the B-17 or the “flying fortress”. Capable of holding five times the requested bomb load, and flying faster and further than previous models, on paper it was the superior choice by far.

That is, until two of the best pilots available performed the test flight and died in the resulting crash.

The B-17’s controls turned out to be so complex that even the highly experienced Major Ployer Peter Hill forgot to release the control lock, thus causing the fatal crash. In other words, the bomber was fantastic, but useless in most cases as there were too many elements for even veteran pilots to successfully manage.

That’s when Boeing created the first documented pre-flight checklist.

Instead of relying on memory and leaving the process vulnerable to human error, pilots now had a set list of items to check at various stages of flight. This not only made it possible to fly the B-17 without severe risk but also allowed much less experienced pilots a chance to take on the task without trouble.

This is just one example of how having a checklist template helps to reduce human error. In the case of processes which are complex enough to almost force human error to occur, they’re the only thing that can make those duties both possible and palatable.

Basic task lists prevent lapses

The story of the first pre-flight checklist also serves as a great demonstration of the ability of checklists to prevent lapses in memory (so long as they are actually followed).

Aside from reading a checklist and then forgetting the instructions in the time it takes to perform them, there’s no realistic cause for human error in the form of a lapse in memory. The task list is documented in black and white and (in most checklists) your progress is recorded as you go.

It’s physically impossible to forget a step unintentionally in this case, as each task has to be read and marked as complete.
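Here’s a minimal sketch of that idea: a task list that records progress as you go and won’t let a step be skipped. The `Checklist` class and its method names are hypothetical, written just for this example, and the steps borrow from the refueling case earlier in the post.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Checklist:
    """A task list that records progress and enforces step order."""
    steps: list[str]
    completed: list[str] = field(default_factory=list)

    def next_step(self) -> str | None:
        """Return the first step not yet marked complete, if any."""
        if len(self.completed) < len(self.steps):
            return self.steps[len(self.completed)]
        return None

    def complete(self, step: str) -> None:
        """Mark a step as done; refuse to skip ahead or repeat."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"Expected {expected!r}, got {step!r}")
        self.completed.append(step)

    def finished(self) -> bool:
        return self.next_step() is None


refuel = Checklist(["Open fuel cap", "Fill tank", "Replace fuel cap"])
refuel.complete("Open fuel cap")
refuel.complete("Fill tank")
print(refuel.finished())  # False: the fuel cap has not been replaced yet
```

Because the run can’t be marked as finished until every step is ticked off in order, driving away without the fuel cap shows up as an incomplete checklist rather than a forgotten task.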

Detailed instructions can be given to stop slips

Slips are usually the result of someone becoming so familiar with a process that they take a step for granted and unintentionally perform a task incorrectly. This is another situation where having a checklist truly shines.

Much as lapses are prevented by having a task list to read through and follow, slips can be nullified by giving instructions for each step. This lets each item have a specified method of completion which ensures that it’s carried out in the correct way and to a satisfactory standard.

For example, going back to the case of refueling a car, the instructions could tell you to check that the nozzle matches your car’s fuel type before filling up. Even such a small tweak would help to drastically limit the number of people who fill up with the wrong type of fuel.
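As a rough sketch of the same idea, a step can carry both its instruction and a simple check that confirms it was done the right way. The `Step` class, `run_step` function, and the dictionary keys below are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """A checklist step with a written instruction and a verification check."""
    name: str
    instruction: str
    check: Callable[[dict], bool]


def run_step(step: Step, context: dict) -> bool:
    """Show the instruction, then verify the step against what actually happened."""
    print(f"{step.name}: {step.instruction}")
    return step.check(context)


pick_nozzle = Step(
    name="Pick nozzle",
    instruction="Confirm the nozzle matches the fuel type on the filler cap.",
    check=lambda ctx: ctx["nozzle"] == ctx["vehicle_fuel"],
)

# A slip in progress: petrol nozzle, diesel car.
ok = run_step(pick_nozzle, {"nozzle": "petrol", "vehicle_fuel": "diesel"})
print(ok)  # False
```

The instruction forces a moment of conscious thought, and the check catches the slip before it turns into a repair bill.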

Rule-based mistakes should only ever happen once

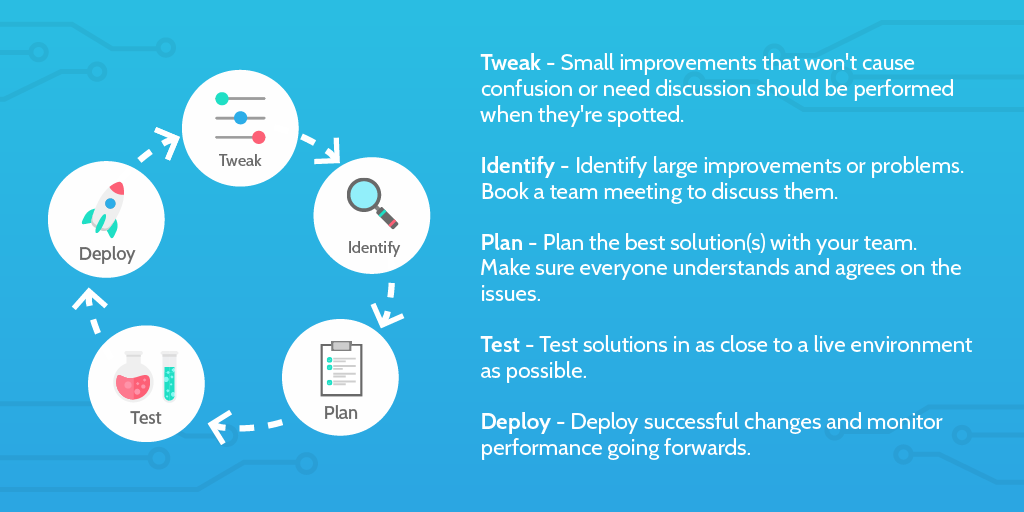

Continuous improvement can be difficult to maintain, but the basic principle lies in tweaking your processes to account for any mistake or flaw that appears. In this way, any human error due to a rule-based mistake should only happen once before being corrected.

Admittedly, it can be difficult to diagnose rule-based mistakes, especially if you’ve been using the same checklist for a long time. That’s why it’s best to meet with everyone involved in the process and go through it with them step by step, asking their opinions and whether they think anything could be improved.

For more tips on creating and deploying this kind of program, check out our posts on change management models and continuous improvement.

Knowledge-based mistakes become rarer the more a checklist is used

In much the same way that checklists limit rule-based mistakes, they also limit knowledge-based human errors, which only occur when a situation arises that the checklist doesn’t account for.

Let’s say that you have a client onboarding checklist, but someone signs up through a new avenue which your process doesn’t account for. This means that any response will have to be improvised, which could lead to a sub-par onboarding experience.

The way to solve this issue is to edit the process template to account for the new situation while working out a solution to the problem. That way (even if it’s a one-time fringe case) your process can prevent the same knowledge-based error from happening twice.

Even if the solution you create isn’t correct, the error technically becomes a rule-based error, which can be ironed out with due iteration. Either way, your knowledge-based error has been solved.

Human error is impossible to eliminate, but checklists get darn close

Where there are humans, there will be human error – it’s impossible to eliminate it entirely. However, using checklists to document and guide your tasks is a great way to limit the chance of human error becoming a real problem.

Sure, people can still ignore the checklist or misread instructions. Rule-based and knowledge-based errors still occur, even if only once per error. Even so, it’s far preferable to relying on memory to complete tasks and limiting what can be done according to the length and complexity of the task.

If you’d like to limit human error and document your processes with the most effective process documentation software on the market, try out Process Street. Not only can you record and perfect your processes with richly detailed tasks, but you can easily assign team members to specific tasks and checklists, control user permissions to limit visibility, use business process automation to complete tasks you don’t want to do manually, and much, much more.

Sign up for a free account today!

How do you tackle human error? What mistakes do you have trouble correcting? I’d love to hear from you in the comments!

Ben Mulholland

Ben Mulholland is an Editor at Process Street, and winds down with a casual article or two on Mulholland Writing. Find him on Twitter here.